Secure Medical Devices with VMware SD-WAN and NSX

German version here: https://www.securefever.com/blog/secure-medical-devices-with-vmware-sd-wan-and-nsx-mdtpt

In recent years the healthcare sector has seen many cyber attacks such as ransomware, malware and trojans. Recently, a ransomware attack hit the Irish public health system (https://www.bbc.com/news/world-europe-57197688). Medical devices such as infusion pumps, computed tomography (CT) scanners, magnetic resonance imaging (MRI) scanners and PACS (Picture Archiving and Communication System) are among the main targets.

Why are medical devices a popular target for hackers?

There are several reasons for this. Medical devices often have security vulnerabilities, and their patches are frequently out of date because of the wide variety of hardware and specialized operating systems and applications in use. It is not just a matter of securing a particular Windows or Linux version: hospitals and clinics have to manage many different software products and end devices. Medical devices use special protocols such as DICOM (Digital Imaging and Communications in Medicine), and regulation (e.g. ISO standards, BSI certifications) often does not allow changes to these appliances.

Another main reason why medical devices are a popular target is the high impact of an attack. An attack in the healthcare sector can be critical: it can result in outages of medical devices, danger to patients, loss of personal patient data or interruptions of daily hospital operations. The COVID-19 pandemic has made this risk even more real and critical.

What are the use cases for SD-WAN and NSX?

Before I describe how VMware secures medical devices, I want to provide an overview of the common use cases for VMware SD-WAN and NSX.

VMware SD-WAN optimizes WAN traffic with dynamic path selection. The solution is transport-independent (broadband, LTE, MPLS), simple to configure and manage, and provides a secure overlay (see picture 1). VMware SD-WAN uses Dynamic Multi-Path Optimization (DMPO) technology to deliver assured application performance and uniform QoS across different WAN connections. DMPO has four key functions: continuous monitoring, dynamic application steering, on-demand remediation and application-aware overlay QoS.

Picture 1: VMware SD-WAN Overview

DMPO can improve line quality for locations with two or more connections, such as MPLS, LTE or leased lines. The WAN optimization is also useful for locations with only a single WAN connection.
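DMPO's internal algorithms are not described in this post, so the following is only a minimal Python sketch of the general idea behind dynamic application steering: every path is measured continuously, paths that violate an application's SLA are excluded, and traffic is steered to the best remaining path. The `PathMetrics` type, the thresholds in `SLA` and the weights in `score()` are hypothetical values chosen for illustration, not VMware defaults.

```python
from dataclasses import dataclass


@dataclass
class PathMetrics:
    name: str          # transport, e.g. "MPLS", "Broadband", "LTE"
    latency_ms: float  # measured latency
    jitter_ms: float   # measured jitter
    loss_pct: float    # measured packet loss in percent


# Hypothetical SLA for a latency-sensitive application (not a VMware default).
SLA = {"latency_ms": 150.0, "jitter_ms": 30.0, "loss_pct": 1.0}


def meets_sla(p: PathMetrics, sla: dict = SLA) -> bool:
    """True if every measured metric is within the application's SLA."""
    return (p.latency_ms <= sla["latency_ms"]
            and p.jitter_ms <= sla["jitter_ms"]
            and p.loss_pct <= sla["loss_pct"])


def score(p: PathMetrics) -> float:
    """Lower is better; loss is weighted heavily because it hurts most."""
    return p.latency_ms + 2 * p.jitter_ms + 100 * p.loss_pct


def select_path(paths: list[PathMetrics]) -> PathMetrics:
    """Steer to the best SLA-compliant path, or the best overall if none comply."""
    candidates = [p for p in paths if meets_sla(p)] or paths
    return min(candidates, key=score)


paths = [
    PathMetrics("MPLS", 40, 5, 0.0),
    PathMetrics("Broadband", 25, 8, 2.5),  # lowest latency, but too much loss
    PathMetrics("LTE", 70, 20, 0.2),
]
print(select_path(paths).name)  # prints "MPLS"
```

Because the measurements are refreshed continuously, re-running the selection after MPLS starts dropping packets would steer the flow to LTE; a real implementation would also add hysteresis so that traffic does not flap between paths.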

The NSX software-defined networking solution is designed to provide security, networking, automation and multi-cloud connectivity for virtual machines, containers and bare-metal servers.

NSX-T provides networking functions with GENEVE overlay technology, implementing switching and routing in a distributed fashion at the hypervisor level. NSX supports multiple hypervisors (ESXi, KVM) and has a policy-driven configuration platform. Network services such as BGP and OSPF for north-south routing, NAT, load balancing, VPN, VRF-Lite, EVPN, multicast and L2 bridging can be implemented with NSX.

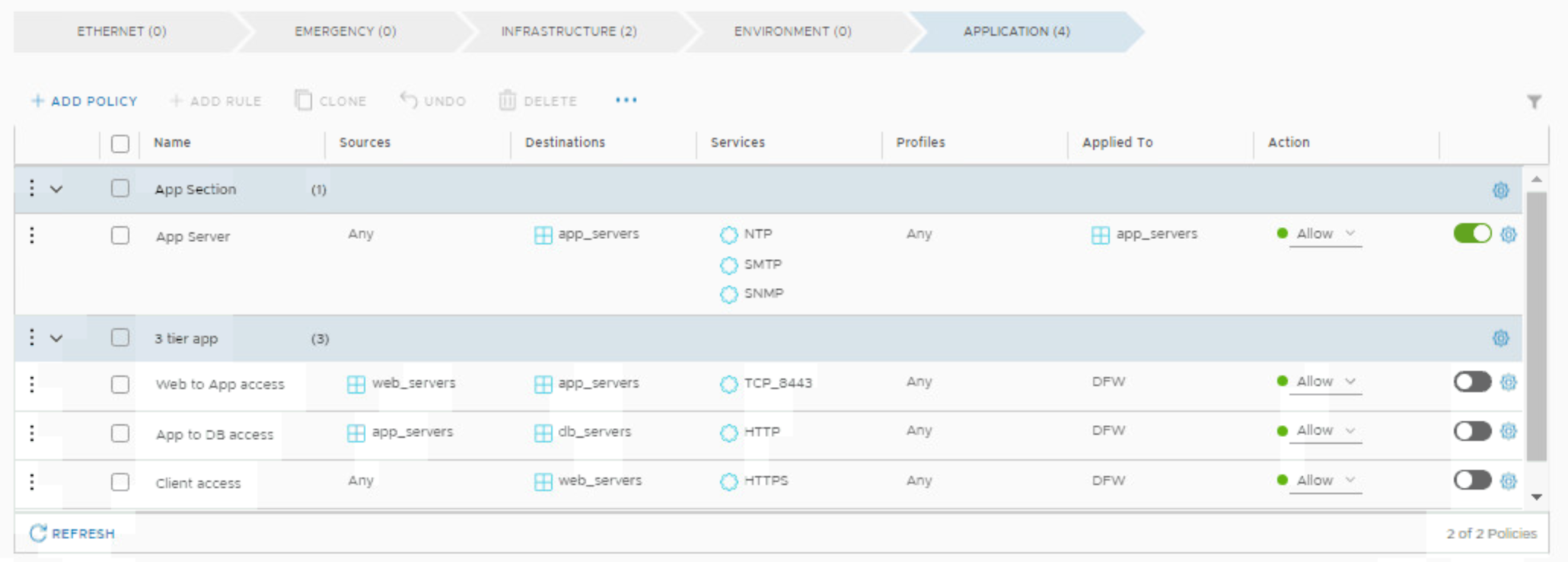

Security with micro-segmentation is the key driver for NSX. The distributed firewall sits in front of every VM, so east-west security can be realized without dependencies on IP address ranges or VLANs. A firewall, Deep Packet Inspection (DPI) and a Context Engine on every hypervisor together provide a high-performance, service-defined firewall with stateful L7 firewalling. ESXi hosts don't need an agent for micro-segmentation; the existing ESXi management connection is used to push firewall rules.
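To illustrate why the distributed firewall does not depend on IP ranges or VLANs, here is a minimal Python sketch of tag-based rule evaluation: rules reference groups (here simply tags) instead of addresses, the first matching rule wins, and anything unmatched is dropped. The `VM` and `Rule` types, the tag names and the default-deny behavior are assumptions for illustration, not the NSX data model; TCP 104 is the well-known DICOM port.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    name: str
    ip: str
    tags: set[str] = field(default_factory=set)  # group membership, e.g. "pacs"


@dataclass
class Rule:
    src_tag: str
    dst_tag: str
    port: int
    action: str  # "ALLOW" or "DROP"


def evaluate(rules: list[Rule], src: VM, dst: VM, port: int,
             default: str = "DROP") -> str:
    """First matching rule wins; IP addresses and VLANs are never consulted."""
    for rule in rules:
        if rule.src_tag in src.tags and rule.dst_tag in dst.tags and rule.port == port:
            return rule.action
    return default


rules = [Rule("modality", "pacs", 104, "ALLOW")]  # DICOM from modalities to PACS

# All three VMs share one subnet, yet they are still isolated from each other.
ct = VM("ct-gw-01", "10.10.0.11", {"modality"})
pacs = VM("pacs-01", "10.10.0.20", {"pacs"})
web = VM("web-01", "10.10.0.30", {"web"})

print(evaluate(rules, ct, pacs, 104))   # ALLOW
print(evaluate(rules, web, pacs, 104))  # DROP
```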

IDPS (Intrusion Detection and Prevention System) is also available since NSX-T version 3.x, including a distributed function (for more details, see my blog post https://www.securefever.com/blog/nsx-t-30-ids). Other security features such as URL analysis, the gateway firewall for north-south security and third-party security vendor integrations are included as well.

Multi-cloud networking is also a use case for NSX. This can connect private data centers for disaster recovery or high-availability network and security designs, or provide hybrid public cloud connectivity.

Container networking and security is another important use case for NSX. This can be achieved with the dedicated NSX Container Plugin (NCP) or, more recently, with Antrea, an open-source CNI (Container Network Interface) plugin created by VMware.

For more details about NSX, see the VMware NSX-T Reference Design Guide for NSX-T 3.x https://communities.vmware.com/docs/DOC-37591 and the NSX-T Security Reference Guide https://communities.vmware.com/t5/VMware-NSX-Documents/NSX-T-Security-Reference-Guide/ta-p/2815645

How can VMware SD-WAN and NSX help secure medical devices?

The combination of SD-WAN and NSX makes the whole solution unique. SD-WAN hardware Edges take care of the initial access of the medical devices and the secure transport connection to the data center. Within the data center, NSX secures the medical device servers, regardless of whether they are virtual machines, bare-metal servers or container-based.

The solution is simple to install and operate; all SD-WAN components (hardware and software) are managed from the SD-WAN Orchestrator. It is flexible; for example, global policies can be configured. Everything within the data center is software-based and easy to scale. An NSX Manager cluster provides the management and control plane for NSX within the data center.

1. SD-WAN Edge device in front of the medical device

The first access point, or default gateway, of the medical devices is the SD-WAN Edge hardware (see picture 2). If you have several medical devices in one area, you can also place an L2 switch or WLAN access controller behind the Edge. A firewall on the SD-WAN Edge handles the initial access security and the connections between different medical devices behind the same SD-WAN Edge.

Picture 2: Network Topology

2. Secure transport connection

The SD-WAN Edge establishes a dedicated tunnel to an SD-WAN Edge in the data center. The local area network (LAN) serves as the transport network. The VeloCloud Multi-Path Protocol (VCMP) is used to establish an IPsec-secured transport tunnel over UDP port 2426. The SD-WAN Edge in the data center could also be hardware-based, but the easiest way is to deploy it as a virtual machine. The VM has one WAN interface that terminates the IPsec tunnel and one LAN interface connected to the medical device servers (virtual machines or bare metal), such as DICOM or PACS servers. If medical devices need to talk to medical devices behind other SD-WAN Edges, the IPsec tunnel can also be created directly between those Edges, without redirection through the data center Edge.

3. SD-WAN within the Data Center with handover to NSX

If no NSX overlay (routing and switching) is configured within the data center, the existing vSphere networking implementation (see picture 3) can be used. All NSX security features can be implemented without changes to routing and switching. The VMs or bare-metal servers use the SD-WAN Edge as their gateway. NSX security is independent of the networking infrastructure, and the NSX distributed firewall sits in front of every VM interface (vNIC). This means traffic between different VMs can be secured regardless of whether the VMs are in the same IP range or not.

If an NSX overlay exists, a routing connection is established between the SD-WAN Edges and the NSX Edges (see picture 3). This can be realized via BGP, OSPF or static routing. An NSX gateway firewall can be configured to secure the north-south traffic or the tenant traffic.

Picture 3: NSX Overlay

4. Operation and Monitoring

The SD-WAN Orchestrator (VCO) takes care of the configuration and administration of the SD-WAN Edges. The SD-WAN Orchestrator is available as a SaaS service or on-premises; the SaaS service is much easier to implement and administer. Only metadata is sent from the SD-WAN Edge to the Orchestrator (VCO), over an encrypted tunnel, for reporting and monitoring purposes. The VCO provides active monitoring reports in its graphical user interface based on the data received from the Edges. Edges collect information such as source IP address, source MAC address, source client hostname and network ID.

The SD-WAN hardware Edges placed in front of the medical devices can be activated by non-IT staff thanks to a Zero Touch Provisioning approach (see picture 4). The process has three steps. First, the IT admin adds the Edge to the VCO and creates an activation key. Second, the device is shipped, and the IT admin emails the activation key to the onsite contact. Finally, the local contact plugs in the device with internet access and activates it with the key from the email. Afterwards, the IT admin has access to the Edge and can complete the configuration to add the medical device.

Picture 4: Zero Touch Provisioning for the SD-WAN Edges
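The three-step Zero Touch Provisioning workflow can be modeled as a small state machine. The Python sketch below is hypothetical and does not reflect the VCO's implementation or API; `EdgeRecord` and `EdgeState` are illustrative names. It only shows the role of the activation key: a shipped Edge is activated only when the onsite contact enters the key that the IT admin issued.

```python
import secrets
from enum import Enum, auto


class EdgeState(Enum):
    PENDING = auto()    # step 1: added in the orchestrator, key created
    SHIPPED = auto()    # step 2: shipped, key e-mailed to the onsite contact
    ACTIVATED = auto()  # step 3: plugged in and activated with the key


class EdgeRecord:
    """Hypothetical orchestrator-side record for one hardware Edge."""

    def __init__(self, serial: str) -> None:
        self.serial = serial
        self.activation_key = secrets.token_hex(8)  # random one-time key
        self.state = EdgeState.PENDING

    def ship(self) -> str:
        """Mark the Edge as shipped and return the key to e-mail."""
        self.state = EdgeState.SHIPPED
        return self.activation_key

    def activate(self, key: str) -> bool:
        """Activate only a shipped Edge, and only with the issued key."""
        if (self.state is EdgeState.SHIPPED
                and secrets.compare_digest(key, self.activation_key)):
            self.state = EdgeState.ACTIVATED
        return self.state is EdgeState.ACTIVATED


edge = EdgeRecord("EDGE-0001")
key = edge.ship()
print(edge.activate("wrong-key"))  # False
print(edge.activate(key))          # True
```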

The whole solution can be monitored with vRealize Network Insight (vRNI). vRNI can discover flows from physical network devices (routers, switches, firewalls), SD-WAN, virtual infrastructure (NSX, vSphere), containers and multi-cloud (AWS, Azure). The tool provides troubleshooting features and helps a lot with security planning. It also provides visibility: picture 5 shows a packet walk from a specific source IP to a specific destination IP, and the path details are very useful.

Picture 5: Path Topology with vRealize Network Insight (vRNI)

Summary

The solution is simple to install and operate. SD-WAN and NSX include a lot of automation by default, which makes them very flexible and scalable. The key features of the solution are:

SD-WAN

Access protection of the medical devices through MAC authentication.

No changes are required on the medical device.

Roaming of devices from one Edge to another without configuration changes.

Zero Touch Provisioning for the Edges.

Separation of all ports on an Edge through VLANs.

Centralized administration.

Use of global policies for simplified administration.

Secure transport encryption from the edge to the data center

Hardware or software Edge possible in the data center

NSX

Protection within the data center with the distributed firewall and micro-segmentation at the VM, container or bare-metal server level.

Granular control of access on every VM

ESXi hosts do not require an agent

Firewall rules move with the VM during vMotion.

Routing instances can be separated

NSX IDPS (Intrusion Detection and Prevention System) with a distributed function

Central administration and monitoring

ESXi hosts at remote sites can be centrally administered with NSX.

There is another brilliant tool named Edge Network Intelligence (formerly Nyansa), which is very interesting for the healthcare sector because it provides AI-powered performance analytics for healthcare networks and devices. I will write another blog post on this topic in the next weeks.

VMware Carbon Black Cloud Container Security with VMC on AWS

On Dec. 22, 2020, VMware Carbon Black Cloud announced the general availability of Container Security. It protects your applications and data in a Kubernetes environment wherever it lives. In this blog post I will explain how to add container security to an existing Kubernetes environment. Keep in mind that I am working in a demo environment.

If you are interested in securing your workloads, take a look at my previous blog post, here.

For more information about K8s or TKG check out the blog from my buddy Alex, here.

What does VMware Carbon Black Container Security provide?

The first release provides full visibility and basic protection for all your Kubernetes clusters, wherever they live, public cloud or on-prem. This visibility helps you understand and find misconfigurations. You can create and assign policies to your containerized workloads. In typical Carbon Black fashion, you can also customize it, and finally, it enables the security team to analyze and control application risks in the development lifecycle. Enough talk, let's jump directly into the action.

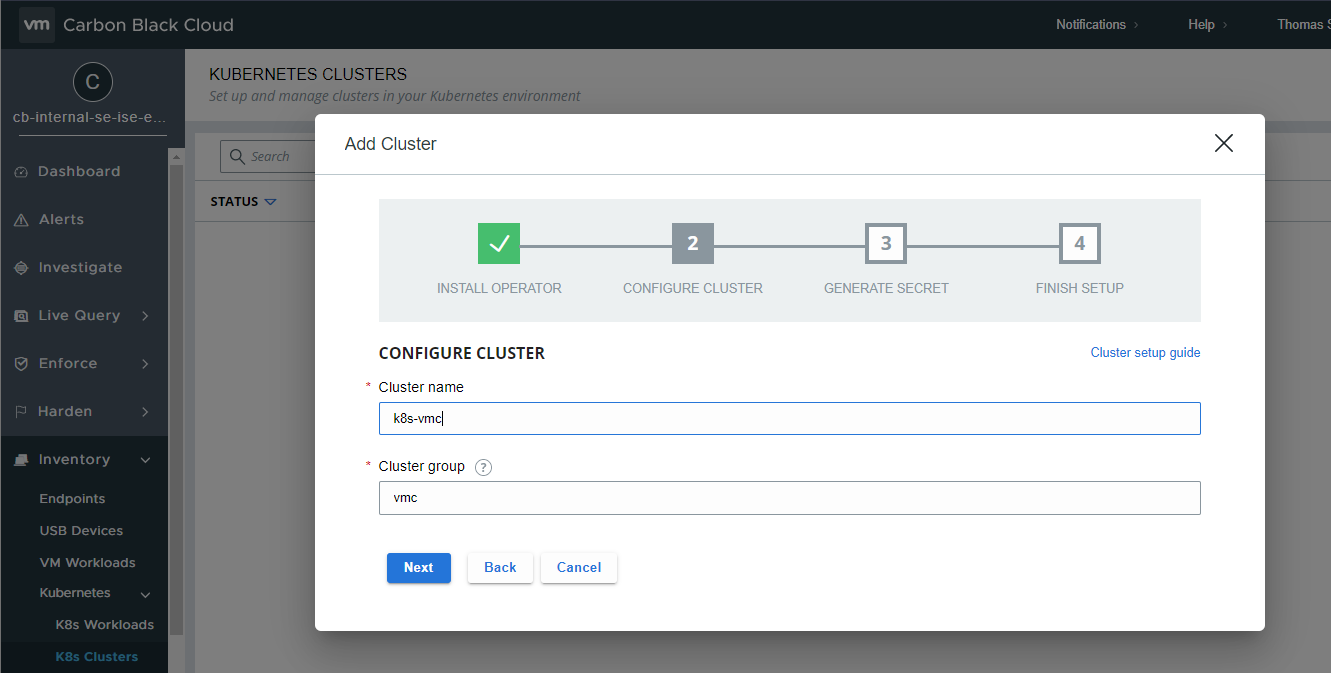

Let's open our Carbon Black Cloud console. Under “Inventory” we find the new tab “Kubernetes”. With “K8s Clusters” -> “Add Cluster” we can add our existing K8s cluster to Carbon Black.

Carbon Black provides easy-to-follow, step-by-step guidance.

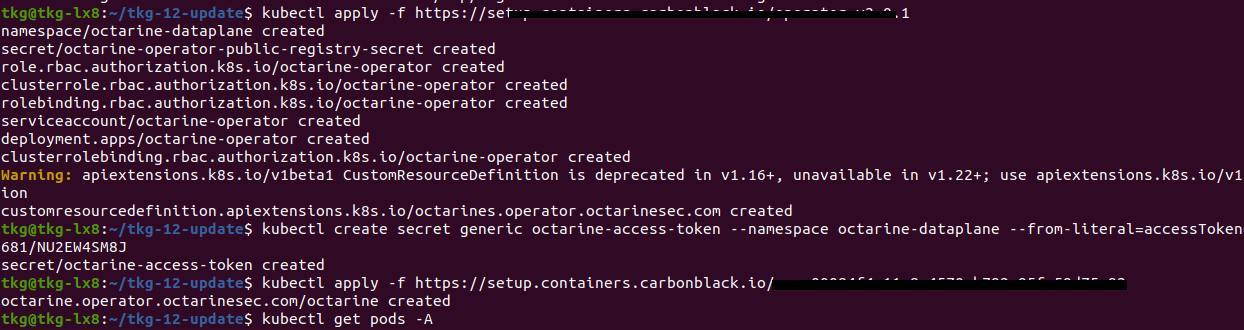

It provides a kubectl command to install the operator. Operators are containers that handle all the communication and take care of your K8s cluster.

We are already connected to our K8s cluster. We paste the kubectl apply command into the console and press ENTER. With the “kubectl get pod -A” command we can see that all necessary components are being created. Meanwhile, we can continue in Carbon Black Cloud.

Our next step is to name our K8s cluster and add or create a cluster group. We are free to choose any cluster name; it just has to be unique. A cluster group organizes multiple clusters in the Carbon Black console. Cluster groups make it easier to specify resources, apply policies and manage your clusters.

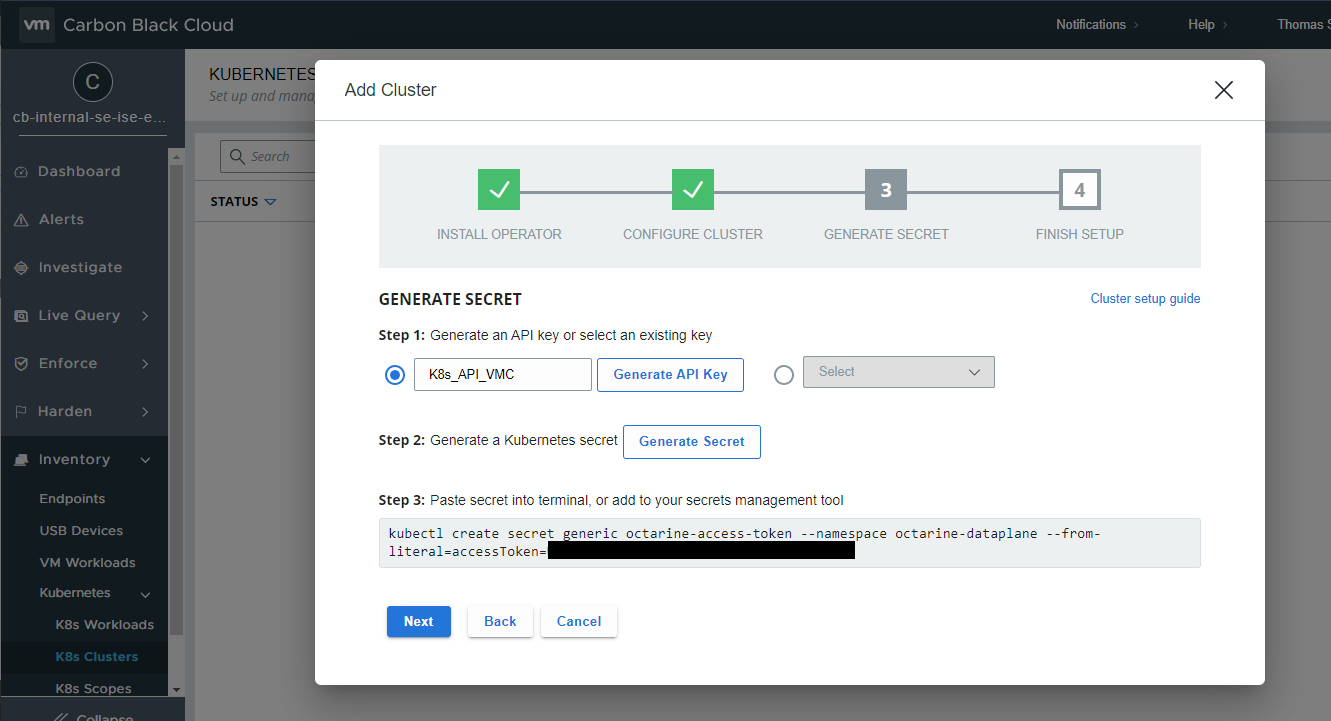

Let's continue: for the next step we need an API token. We give it a name and, with “Generate API Key”, generate one with the needed permissions. Once our key is ready, we can create the Secret for the K8s components. Copy and paste the command into your kubectl console.

To finish the setup, we run another apply command (copy and paste again), and we are done. Now we wait 2-3 minutes until the installed components report back to the Carbon Black console. Simple, right?

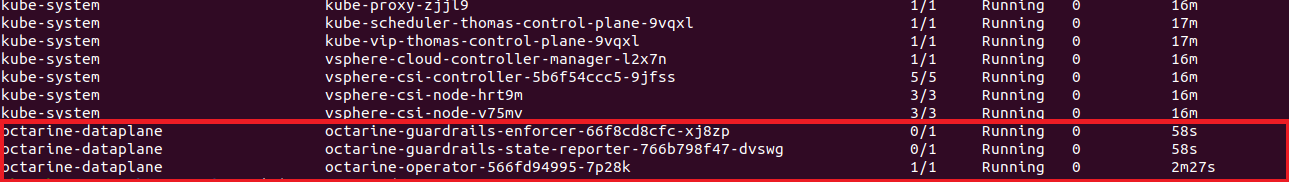

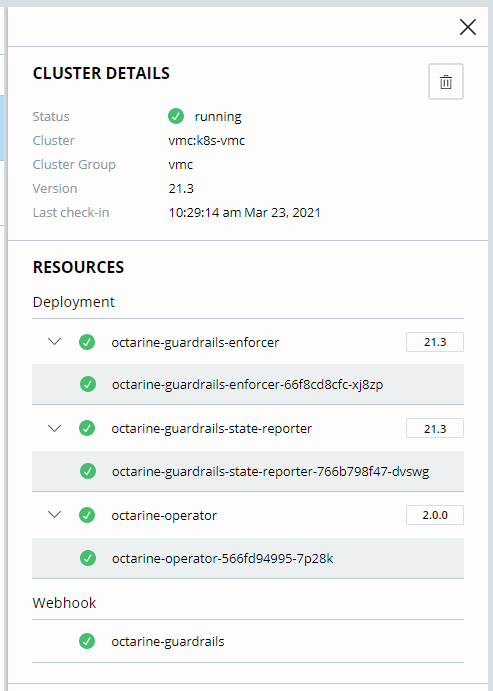

Let's look at the details. The status is “running” and green.

We also see additional information such as the name, the cluster group, the version in use and the last check-in, as well as all deployed resources and their versions. When we run “kubectl get pods -A” in our console, we see the same resources:

octarine-dataplane octarine-guardrails-enforcer-66f8cd8cfc-xj8zp 1/1 Running 0 25m

octarine-dataplane octarine-guardrails-state-reporter-766b798f47-dvswg 1/1 Running 0 25m

octarine-dataplane octarine-operator-566fd94995-7p28k 1/1 Running 0 26m
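If you prefer to check the rollout from the command line, a small Python helper can parse the plain-text output of `kubectl get pods -A` and report pods in the `octarine-dataplane` namespace that are not fully ready. This is a sketch that assumes kubectl's default column layout (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE); `unhealthy_pods` is a hypothetical helper, and for anything beyond a quick check, `kubectl get pods -o json` is more robust than parsing columns.

```python
def unhealthy_pods(output: str, namespace: str = "octarine-dataplane") -> list[str]:
    """Return pods in `namespace` that are not Running or not fully ready."""
    bad = []
    for line in output.splitlines():
        cols = line.split()
        # Skip the header, blank lines and pods from other namespaces.
        if len(cols) < 6 or cols[0] != namespace:
            continue
        name, ready, status = cols[1], cols[2], cols[3]
        ready_now, ready_total = ready.split("/")  # READY looks like "1/1"
        if status != "Running" or ready_now != ready_total:
            bad.append(name)
    return bad


sample = """\
NAMESPACE NAME READY STATUS RESTARTS AGE
octarine-dataplane octarine-guardrails-enforcer-66f8cd8cfc-xj8zp 1/1 Running 0 25m
octarine-dataplane octarine-guardrails-state-reporter-766b798f47-dvswg 1/1 Running 0 25m
octarine-dataplane octarine-operator-566fd94995-7p28k 1/1 Running 0 26m
"""
print(unhealthy_pods(sample))  # []
```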

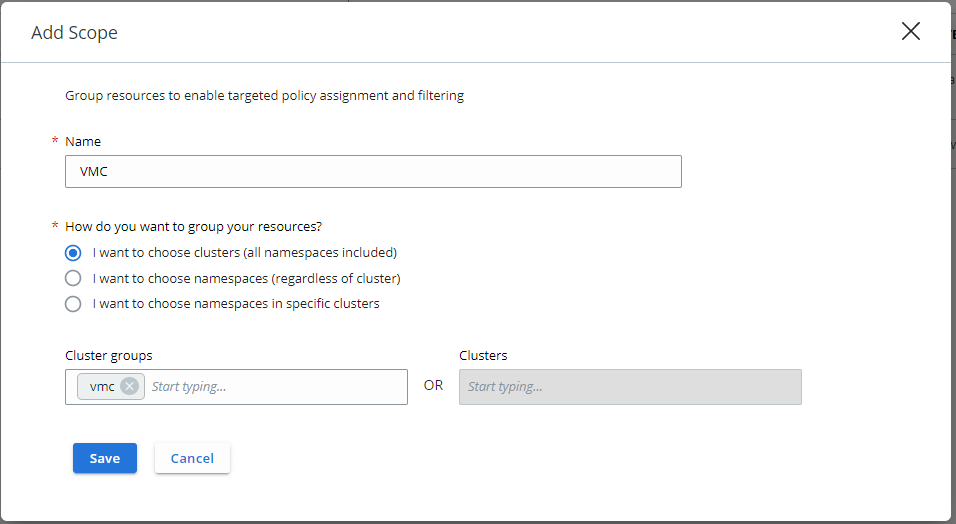

Great, next we can create a scope. A scope is important when we start creating policies. To create a scope, click “K8s Scopes” on the left side of the Carbon Black console, and then click “add scope”.

We define a very simple scope and name it “VMC”. Next, we decide how we want to group our resources. There are three options:

I want to choose clusters (all namespaces included)

I want to choose namespaces (regardless of cluster)

I want to choose namespaces in specific cluster

We choose the first option, clusters, and simply add our cluster group to it.
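The three grouping options differ only in how a workload's cluster and namespace are matched. The following Python sketch models that matching logic. The `Scope` type, the mode names and the cluster name `vmc-k8s-01` are hypothetical and not Carbon Black's data model; a cluster group is represented simply as the set of cluster names it contains.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scope:
    name: str
    mode: str  # "clusters", "namespaces" or "namespaces_in_cluster"
    clusters: frozenset[str] = frozenset()
    namespaces: frozenset[str] = frozenset()


def in_scope(scope: Scope, cluster: str, namespace: str) -> bool:
    """Decide whether a workload in cluster/namespace falls under the scope."""
    if scope.mode == "clusters":               # option 1: all namespaces included
        return cluster in scope.clusters
    if scope.mode == "namespaces":             # option 2: regardless of cluster
        return namespace in scope.namespaces
    if scope.mode == "namespaces_in_cluster":  # option 3: both must match
        return cluster in scope.clusters and namespace in scope.namespaces
    raise ValueError(f"unknown scope mode: {scope.mode}")


# A "VMC" scope like the one above, with the cluster group expanded to its clusters.
vmc = Scope("VMC", "clusters", clusters=frozenset({"vmc-k8s-01"}))
print(in_scope(vmc, "vmc-k8s-01", "kube-system"))  # True
print(in_scope(vmc, "lab-k8s-02", "default"))      # False
```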

Finally, our next mission is to create a new policy for our K8s cluster. Let's go to Enforce -> K8s Policies in the Carbon Black Cloud console. With a click on “add Policy” we can create our first policy.

As usual, we have to name it. I name it “vmc_basic_hardening”, select our newly created scope and check “include init containers”.

Next, we can select predefined rules; all of them are best practices from the community.

For basic hardening I would choose the following rules:

Workload Security

Enforce not root – block:

Containers should be prevented from running with a root primary or supplementary GID.

Allow privilege escalation – block:

AllowPrivilegeEscalation controls whether a process can gain more privileges than its parent process.

Writable file system – block:

Allows files to be written to the system, which makes it easier for threats to be introduced and persist in your environment.

Allow privileged container – block:

Runs container in privileged mode. Processes in privileged containers are essentially equivalent to root on the host.

Network

Node Port – alert:

Allows workloads to be exposed by a node port.

Load Balancer – alert:

Allows workloads to be exposed by a load balancer.

Command

Exec to container – block:

Kubectl exec allows a user to execute a command in a container. Attackers with permissions could run ‘kubectl exec’ to execute malicious code.
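Most of the workload rules above correspond to standard Kubernetes `securityContext` fields (`runAsNonRoot`, `runAsGroup` and `supplementalGroups`, `allowPrivilegeEscalation`, `readOnlyRootFilesystem`, `privileged`), and the network rules correspond to the Service `type`. The Python sketch below approximates those checks on a manifest parsed into a dict. It illustrates what the rules look at and is not Carbon Black's actual evaluation logic; the function names are hypothetical, unset fields are treated as violations (the strict interpretation), and “Exec to container” is omitted because it concerns API requests rather than manifests.

```python
# Each rule: (name, action, check(container_sc, pod_sc) -> True if violated).
WORKLOAD_RULES = [
    ("Enforce not root", "block",
     lambda sc, pod_sc: (sc.get("runAsNonRoot") is not True
                         or sc.get("runAsGroup") == 0
                         or 0 in pod_sc.get("supplementalGroups", []))),
    ("Allow privilege escalation", "block",
     lambda sc, pod_sc: sc.get("allowPrivilegeEscalation") is not False),
    ("Writable file system", "block",
     lambda sc, pod_sc: sc.get("readOnlyRootFilesystem") is not True),
    ("Allow privileged container", "block",
     lambda sc, pod_sc: sc.get("privileged") is True),
]

# Service types covered by the two network rules.
NETWORK_RULES = {"NodePort": ("Node Port", "alert"),
                 "LoadBalancer": ("Load Balancer", "alert")}


def pod_violations(pod: dict, include_init: bool = True) -> list[tuple[str, str, str]]:
    """Return (container, rule, action) for every violated workload rule."""
    spec = pod.get("spec", {})
    pod_sc = spec.get("securityContext", {})
    containers = list(spec.get("containers", []))
    if include_init:  # mirrors the "include init containers" checkbox
        containers += spec.get("initContainers", [])
    found = []
    for c in containers:
        # Container-level settings override pod-level ones.
        sc = {**pod_sc, **c.get("securityContext", {})}
        for rule, action, violated in WORKLOAD_RULES:
            if violated(sc, pod_sc):
                found.append((c["name"], rule, action))
    return found


def service_violations(service: dict) -> list[tuple[str, str]]:
    """Return (rule, action) if the Service exposes workloads outside the cluster."""
    svc_type = service.get("spec", {}).get("type", "ClusterIP")
    return [NETWORK_RULES[svc_type]] if svc_type in NETWORK_RULES else []


pod = {"spec": {"containers": [
    {"name": "app", "securityContext": {"runAsNonRoot": True,
                                        "allowPrivilegeEscalation": False,
                                        "readOnlyRootFilesystem": True}},
    {"name": "debug", "securityContext": {"privileged": True}},
]}}
for violation in pod_violations(pod):
    print(violation)  # four tuples, all for the "debug" container
```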

On the next screen we see an overview of all rules and how many violations each would produce. If you apply the policy to a production environment with many violations, it is recommended to disable those rules or add the affected resources to the exceptions. Talk to the app owners before enabling the rules.

Finally, we confirm the policy and save it.

Now we can look at the workloads and their risk level. Let's go back to Inventory -> Kubernetes -> K8s Workloads.

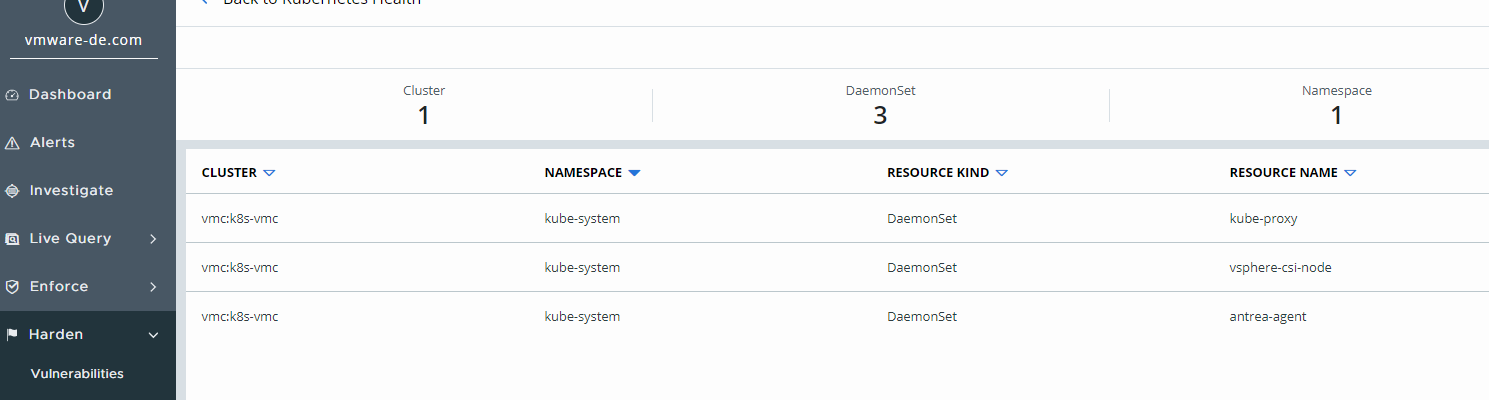

Let's take a look at the health of our K8s cluster. To do so, we go to “Harden” -> “K8s Health”. We can filter by scope and get a great overview of our workloads, network, operations and volumes.

By clicking on a specific topic, like “allow privileged container”, you see an overview of the resource name, cluster, namespace and resource kind.

Another overview is available under “K8s Violations”: you can see the specific violation, the impacted resource, the namespace, the cluster, the scope and the applied policy.

To wrap up: it doesn't matter which platform your K8s clusters run on, but running them in a VMC on AWS environment brings many benefits, such as flexibility and less maintenance. Carbon Black Container Security gives security teams and administrators back their visibility into the container world.

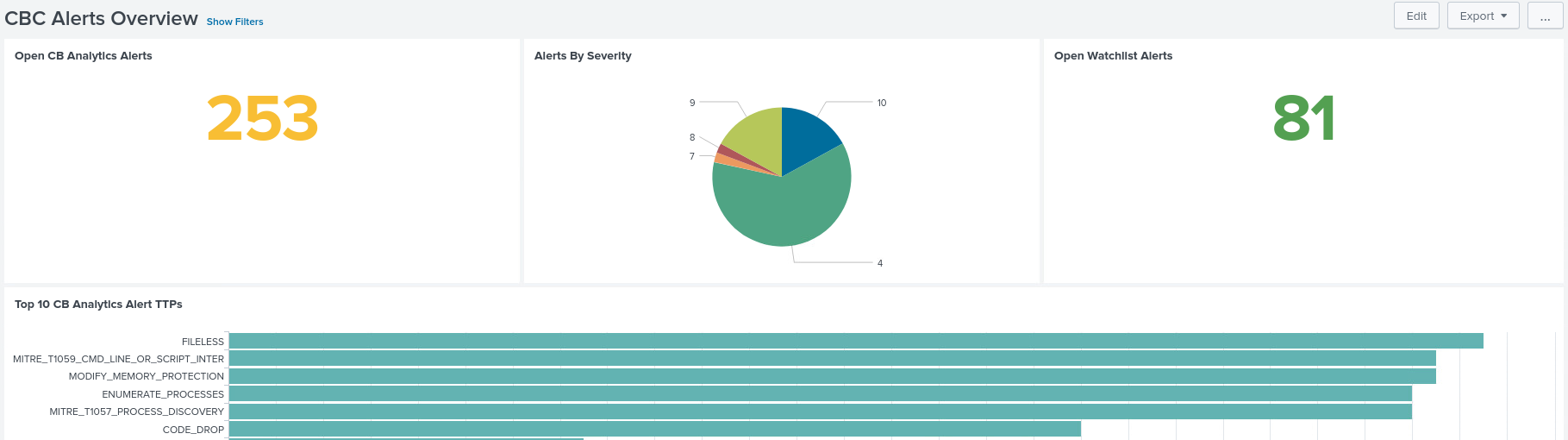

Carbon Black App For Splunk

Carbon Black has put a lot of effort into developing a new, unified app for Splunk that integrates alert and event data from workloads and endpoints into Splunk dashboards. In addition to that, it fully utilizes the Splunk Common Information Model, so we don’t have to deal with event normalization.

There are several good reasons to integrate Carbon Black events and alerts into Splunk. The most interesting use cases for me are data enrichment and correlation. I might get more into detail about this in a future post. Today, I’d like to focus on installation options and the setup.

Deployment Options

There are two options for getting Carbon Black Cloud (CBC) data into Splunk:

1. CBC Event Forwarder

2. CBC Input Add-On

Using the event forwarder (option 1) is the recommended way to get alert data into Splunk and - at the time of writing - it is the only way to get event data ingested. A setup would look like this (on a distributed environment):

This option has low latency and is reliable and scalable. Therefore, it should be the preferred way of data ingestion, but since I don't have an AWS S3 bucket, I'm going to choose the second option.

There is an excellent writeup available in the community and a solid documentation on the developer page if you want to learn more about the first option!

Installing the Carbon Black App for Splunk

Now, let’s get started. First of all, I’ll install the Carbon Black App for Splunk. I have a single on-premise server instance of Splunk, so I’ll just install the “VMware Carbon Black Cloud” app from Splunkbase.

When it comes to distributed Splunk environments, you’ll need to do additional installations on your indexing tier and the heavy forwarder.

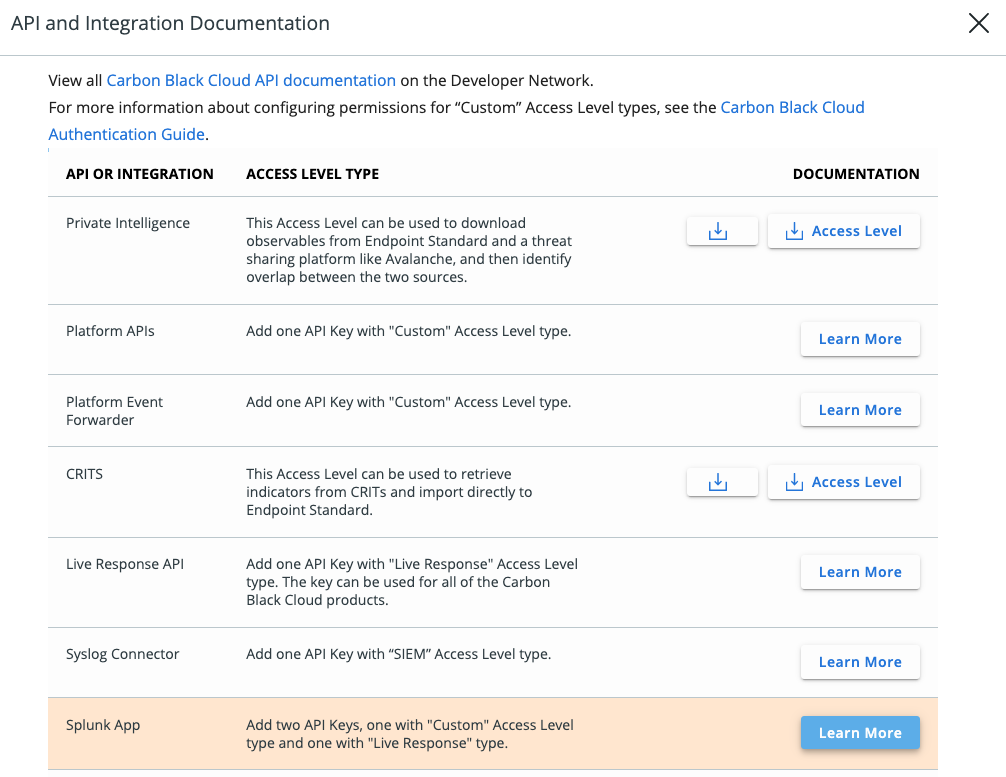

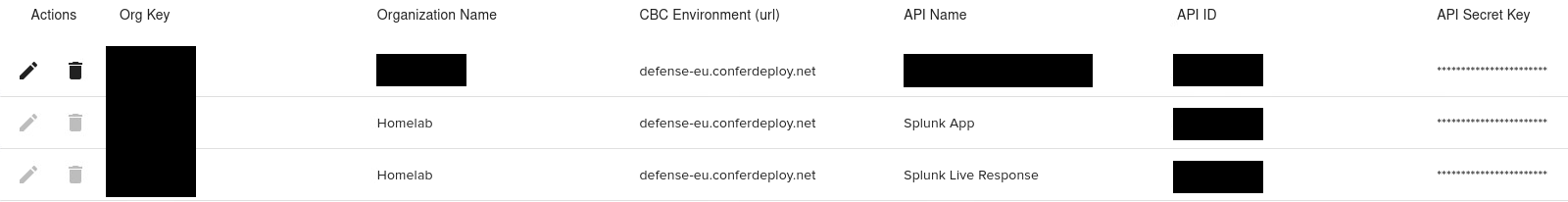

Creating API keys

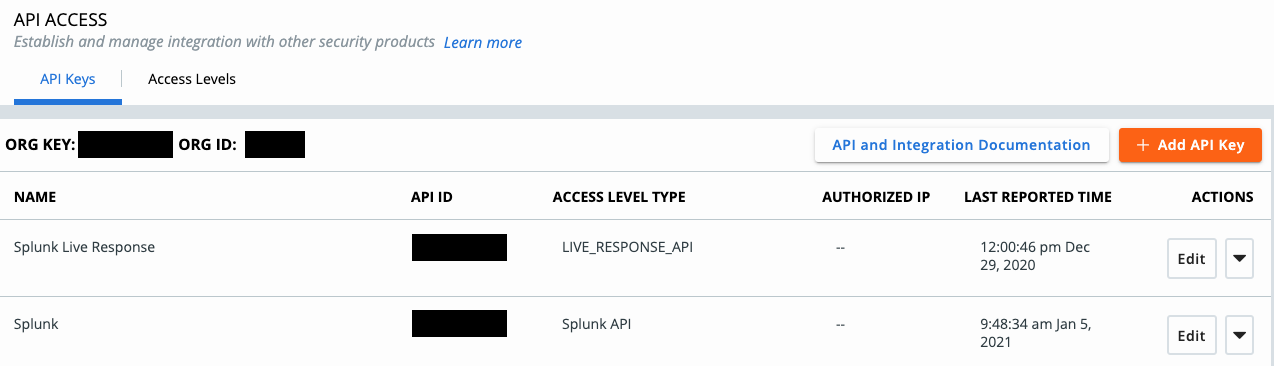

Splunk needs API access to receive data, but also for workflows and Live Response. To create the API keys I switch to the Carbon Black console and navigate to:

Settings > API Access > API Access Levels > Add Access Level

I named it “Splunk API” and assigned the permissions as in the table below:

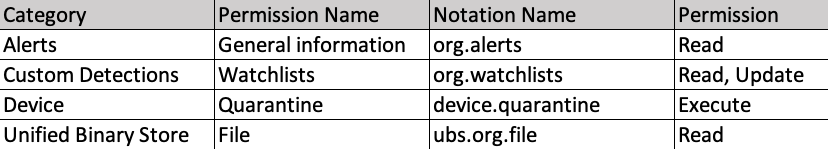

Once that has been done, it's time to create two new API keys. The first one uses the custom access level that has just been created. The second API key is for Live Response, so the preset “Live Response” access level can be assigned to it.
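For reference, each API key consists of an API ID and an API secret key, and Carbon Black Cloud API requests authenticate with an `X-Auth-Token` header of the form `<API Secret Key>/<API ID>`. The Splunk app builds these headers itself, so the sketch below is purely illustrative: it uses a hypothetical `CbcApiKey` type and placeholder credentials to show that each key carries its own credentials, and therefore only the permissions of the access level assigned to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CbcApiKey:
    purpose: str         # e.g. "alerts" (custom "Splunk API" level) or "live_response"
    api_id: str          # API ID shown in the Carbon Black console
    api_secret_key: str  # API Secret Key belonging to that API ID

    def auth_header(self) -> dict[str, str]:
        # Carbon Black Cloud API authentication: X-Auth-Token: <API Secret Key>/<API ID>
        return {"X-Auth-Token": f"{self.api_secret_key}/{self.api_id}"}


# Placeholder credentials; never hard-code real keys.
alerts_key = CbcApiKey("alerts", "ALERTSID01", "alerts-secret")
lr_key = CbcApiKey("live_response", "LRID0002", "lr-secret")
print(alerts_key.auth_header())  # {'X-Auth-Token': 'alerts-secret/ALERTSID01'}
```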

Setup Carbon Black App for Splunk

Moving on to the Splunk setup, the first thing is to open the setup page:

Apps > Manage Apps > VMware Carbon Black Cloud “Set up”

I recommend changing the base index to avoid the use of Splunk’s default index “main”. At this point in time, I had already created a new index called “carbonblack”, which I then set as the “VMware CBC Base Index” under the Application Configuration page.

On the same page, you can find a checkbox for data model acceleration. If you're about to index more than a few GB of Carbon Black data per day, check this box. That's not the case for me, so I'll leave it at the default and continue with the “API Token Configuration” tab.

The API token configuration is pretty straightforward: simply copy and paste the previously created API credentials for alerts and Live Response.

Because I'm using the Alerts API instead of the Event Forwarder, the “Alert Ingest Configuration” has to be configured. It lets us set the lookback and the interval and select a filter for the minimum alert severity.
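The exact polling semantics of the app are not described here, so the following Python sketch only shows one common way such settings interact, under stated assumptions: on every interval, alerts from the last lookback window are fetched; the lookback should be at least as long as the interval so that no alerts fall into a gap; overlapping windows return some alerts twice, so alert IDs that were already ingested are skipped; and alerts below the minimum severity (assumed to be numeric, higher meaning more severe) are dropped. The function names and the alert dict shape are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def check_settings(interval: timedelta, lookback: timedelta) -> None:
    """A lookback shorter than the polling interval would leave unqueried gaps."""
    if lookback < interval:
        raise ValueError("lookback must be at least as long as the interval")


def poll_window(now: datetime, lookback: timedelta) -> tuple[datetime, datetime]:
    """Time range queried on each poll: [now - lookback, now]."""
    return now - lookback, now


def new_alerts(alerts: list[dict], min_severity: int, seen_ids: set[str]) -> list[dict]:
    """Drop low-severity alerts and alerts already ingested by an earlier poll."""
    fresh = []
    for alert in alerts:
        if alert["severity"] >= min_severity and alert["id"] not in seen_ids:
            seen_ids.add(alert["id"])
            fresh.append(alert)
    return fresh


interval, lookback = timedelta(minutes=5), timedelta(minutes=10)
check_settings(interval, lookback)
start, end = poll_window(datetime(2021, 1, 1, 12, 0, tzinfo=timezone.utc), lookback)
seen: set[str] = set()
batch = [{"id": "a1", "severity": 3}, {"id": "a2", "severity": 7}]
print([a["id"] for a in new_alerts(batch, 5, seen)])  # ['a2']
print(new_alerts(batch, 5, seen))  # [] (a2 was already ingested)
```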

To finalize the configuration I assign the API keys as follows:

NSX-T Upgrade to Version 3.1

VMware announced NSX-T version 3.1 on 30 October 2020. In this blog post I want to describe how to upgrade from version 3.0 to version 3.1.

There are many new features in NSX-T 3.1, such as:

IPS (Intrusion Prevention System)

Enhanced Multicast capabilities

Federation is supported for production. With NSX Federation, several sites can be managed with a Global Manager cluster.

Support for vSphere Lifecycle Manager (vLCM)

Many other features have been added in the new release as well. You can check the details in the VMware release notes for 3.1: https://docs.vmware.com/en/VMware-NSX-T-Data-Center/3.1/rn/VMware-NSX-T-Data-Center-31-Release-Notes.html

My lab has two VM Edge transport nodes configured with BGP routing northbound via the Tier-0 gateway and dedicated Tier-1 gateways (see Picture 1). I have a vSphere management cluster with two ESXi hosts that are not prepared for NSX. The NSX Manager and the NSX Edges are located in the management cluster. A compute cluster with two ESXi hosts and one KVM hypervisor is used for the workloads.

Picture 1: LAB Logical Design

Before you start, check the upgrade checklist in the NSX-T upgrade guide. The upgrade guide is available in the VMware documentation center https://docs.vmware.com/en/VMware-NSX-T-Data-Center/3.1/upgrade/GUID-E04242D7-EF09-4601-8906-3FA77FBB06BD.html and can also be downloaded as a PDF file.

One important point on the checklist is to verify the supported hypervisor upgrade path; for example, the KVM on Ubuntu 16.04 in my lab is no longer supported with NSX-T 3.1.

After you have verified the pre-check documentation, you can download the upgrade bundle for NSX-T 3.1 from the VMware download center. The NSX-T upgrade bundle is 7.4 GB. As always, for security reasons it is recommended to verify the checksum of the software.
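The checksum can be verified with standard tools such as `sha256sum`, or with a few lines of Python. The sketch below hashes the file in 1 MiB chunks so that the 7.4 GB bundle never has to fit in memory, and compares the result with the SHA-256 value published on the download page. The function names are illustrative, and the file name in the usage line is a placeholder.

```python
import hashlib
import hmac
import io


def sha256_of_stream(stream, chunk_size: int = 1024 * 1024) -> str:
    """Hash a binary stream in chunks so a multi-GB bundle never fills memory."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def verify_bundle(path: str, expected_sha256: str) -> bool:
    """Compare the file's SHA-256 with the value from the download page."""
    with open(path, "rb") as f:
        actual = sha256_of_stream(f)
    return hmac.compare_digest(actual, expected_sha256.strip().lower())


# Self-check against the standard SHA-256 test vector for b"abc".
assert sha256_of_stream(io.BytesIO(b"abc")) == (
    "ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad")
```

Usage would look like `verify_bundle("nsx-upgrade-bundle.mub", "<SHA-256 from the download page>")`, which returns `True` only if the bundle is intact.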

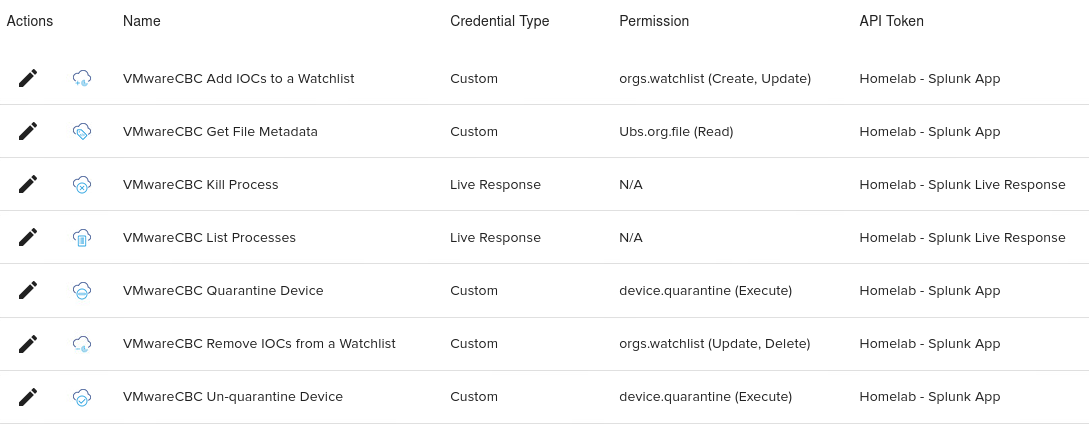

Now we want to start the upgrade. First, navigate to the NSX-T Manager GUI > System > Upgrade. The first step is to upload the upgrade bundle, either from a local file or from a remote location (see Picture 2). For performance reasons, the local upload should be preferred. It took me about 10 minutes to upload the .mub file locally.

Picture 2: NSX-T Upgrade

Once the software is uploaded, four steps are required to complete the upgrade:

Run Pre-checks

Edges

Hosts

Management Nodes

Run Pre-checks

Before we upgrade the components, we need to run the pre-checks (see Picture 3). Pre-checks can be run for all components at once or individually for Edges, Hosts and Management Nodes. The pre-check results can be exported to a CSV file. In my lab the system showed some warnings. For the Edges and the NSX Manager, I received warnings that the root and admin passwords would expire soon. The warning had also appeared in the NSX-T Manager alarms beforehand, but I had not checked them :-) For the hosts, I got the message that my KVM Ubuntu version is not compatible with NSX-T 3.1.

Picture 3: Upgrade Pre-check

Edge Upgrade

After the pre-checks have been verified, the next step is the Edge upgrade (see Picture 4). You can choose the serial or the parallel option. Serial means upgrading all Edge upgrade unit groups one after another; parallel means upgrading all Edge upgrade unit groups simultaneously. The serial and parallel options can be set across groups and within groups. I have only one group because I have only one Edge cluster. Within an Edge cluster, the order should always be serial. You can follow the upgrade process under Details, where you get log entries from the installation.

Picture 4: Edge upgrade

Hosts Upgrade

The next step of the upgrade is the hosts upgrade (see Picture 5). Here, too, you can choose the parallel or serial option. The maximum for a simultaneous upgrade is five host upgrade unit groups and five hosts per group.

Verify that ESXi hosts that are part of a cluster with DRS disabled, as well as standalone ESXi hosts, are placed in maintenance mode.

For ESXi hosts that are part of a fully enabled DRS cluster, if the host is not in maintenance mode, the upgrade coordinator requests that the host be put in maintenance mode. vSphere DRS migrates the VMs to another host in the same cluster during the upgrade and places the host in maintenance mode.

Picture 5: Hosts Upgrade
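The parallel limits can be made concrete with a small planning sketch. The Python code below is hypothetical and idealized (the real upgrade coordinator also handles maintenance mode, failures and pauses, and groups do not finish in lockstep): in parallel mode it runs up to five upgrade unit groups at once and up to five hosts per group at once, and in serial mode it upgrades one host after another. The function names and the host and group names are placeholders.

```python
MAX_PARALLEL_GROUPS = 5   # host upgrade unit groups upgraded at the same time
MAX_HOSTS_PER_GROUP = 5   # hosts upgraded at the same time within one group


def chunk(items: list, size: int) -> list[list]:
    """Split items into consecutive slices of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def plan_waves(groups: dict[str, list[str]], parallel: bool = True) -> list[list[str]]:
    """Return upgrade waves; all hosts in one wave are upgraded concurrently."""
    if not parallel:
        # Serial: one host after another, group by group.
        return [[host] for hosts in groups.values() for host in hosts]
    # Within each group, at most MAX_HOSTS_PER_GROUP hosts at a time.
    per_group = [chunk(hosts, MAX_HOSTS_PER_GROUP) for hosts in groups.values()]
    waves = []
    # At most MAX_PARALLEL_GROUPS groups are active at a time.
    for active in chunk(per_group, MAX_PARALLEL_GROUPS):
        depth = max(len(batches) for batches in active)
        for i in range(depth):
            wave = [h for batches in active if i < len(batches) for h in batches[i]]
            waves.append(wave)
    return waves


compute = {"compute-cluster": [f"esx-{n:02d}" for n in range(1, 8)]}
for wave in plan_waves(compute):
    print(wave)
# ['esx-01', 'esx-02', 'esx-03', 'esx-04', 'esx-05']
# ['esx-06', 'esx-07']
```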

Management Nodes Upgrade

The last step is the NSX-T Manager upgrade (see Picture 6). In my lab I have only one NSX-T Manager, and the process is quite straightforward.

Picture 6: NSX-T Manager Upgrade

Summary

The NSX-T 3.1 upgrade is really simple and does not need to be done in one step. The upgrade is possible with little or no downtime, depending on your NSX-T design. My recommendation is to do the Edge and NSX Manager upgrades in a maintenance window. The transport nodes can be upgraded afterwards during business hours. The serial and parallel options are also very helpful there.

VMware & Cloud Workload Protection

Hey everyone!

After the announcement and additional sessions at VMworld in September, VMware Carbon Black (the security business unit of VMware) launched its Workload Security offering(s) last week. This is the next strategic step by VMware within its “Intrinsic Security” vision and strategy.

Cloud Workload Security or Cloud Workload Protection, aka CWS/CWP/CWPP (the second P stands for Platform), is not really a “new” category in the security market. If you look at the enterprise security landscape and some of the well-known security vendors, you will easily find that CWP is the successor of legacy server security products and suites. In times of physical, virtual, container and serverless systems (or just workloads) in most data center and infrastructure environments, it is one of the most important topics security teams need to address right now to secure their IP.

When I started writing this blog post, I thought about how deep I should go into this specific topic in a reasonable amount of time without getting lost in details. But then I found a blog post from the Carbon Black team that describes most of the ideas I wanted to share in a very good way. You may also have noticed that I more or less borrowed their article title, but it's the perfect match! So, if you are new to all this or just want to refresh your knowledge, please take a look at this blog post by the Carbon Black folks, here -> Defining Cloud Workload Protection

OK, I hope you enjoyed reading the article I mentioned! :)

Now I think we're good to go to dig deeper and check out some details of this product launch!

The Cloud Workload Protection (CWP) module adds new functionality such as vulnerability assessment, increased data center visibility and an “agentless” approach to VMware Carbon Black's cloud-native endpoint security platform, Carbon Black Cloud (CBC). CBC already offers several security components around NGAV, EDR, and audit and remediation, using the latest anti-malware methods such as real-time queries, machine learning, AI, cloud reputation and data enrichment against malware, advanced attacks and emerging threats.

New features added by CWP within the Carbon Black Cloud Management console

Carbon Black Cloud Workload itself is a data center security product that protects your workloads running in a virtualized environment. It ensures that security is intrinsic to the virtualization environment by providing built-in protection for virtual machines. After enabling the Carbon Black functionality in vCenter Server, you can view the inventory protected by Carbon Black Cloud Workload and view the inventory and risk assessment dashboard provided by the Carbon Black Cloud Workload Plug-in.

You can now easily monitor and protect the data center workloads from the Carbon Black Cloud console. The Carbon Black Cloud Workload Plug-in provides deep visibility into your data center inventory and end-to-end life-cycle management for the components.

Carbon Black Cloud Workload consists of a few key components that interact with each other.

You must first deploy an on-premises OVF/OVA template for the Carbon Black Cloud Workload appliance that connects the Carbon Black Cloud to the vCenter Server through a registration process. After the registration is complete, the Carbon Black Cloud Workload appliance deploys the Carbon Black Cloud Workload Plug-in and collects the inventory from the vCenter Server. The collected inventory data is displayed on the plug-in Inventory tab and is also communicated to the Carbon Black Cloud console.

So far, so good. Let's do a quick check of the available information and resources! Here is a short overview:

the good thing:

All VMware vSphere customers have access to an extended trial version of VMware Carbon Black Cloud Workload Essentials. You can sign up for it HERE.

Check out the datasheet to get some details about it HERE. There is also a video available HERE: Introduction to VMware Carbon Black Cloud Workload (direct video link)

the not so good thing:

you need to be very quick to sign up for the free and extended trial phase ;)

Some copy / paste content from the Trial FAQ (see full details at the link below) to light up the darkness:

"...

What is the deadline for sign up for the free trial? December 1, 2020.

After I sign up, how soon will I get access to the Workload Trial? The Workload Product Trial will be available November 2020. Eligible participants will receive email communications to let them know when the product is launched and how to download.

How long does the free trial last? The trial will be available through April 30, 2021.

..."

There is tons of information available already, but I searched for the (hopefully) most useful content, which can be found below:

Additional Content & Details:

On-Demand Webinar - Future Ready Now: Securing Workloads

On-Demand Webinar - Securing Workloads on vSphere, VCF, and the Private Cloud

Technical Details:

VMware Docs: VMware Carbon Black Cloud Workload Documentation

VMware Docs: Installation Guide

VMware Testdrive: Introduction to Carbon Black Cloud Workload

Carbon Black User Exchange (Community): Onboarding with VMware Carbon Black Cloud Workload

Enough said! Now it’s time to try it out! :)

VMworld Network & Security Sessions 2020

VMworld 2020 will take place remotely this year, from 29 September to 1 October 2020. In this blog post I recommend some deep-dive sessions on Network & Security. I have excluded general keynotes, certification and Hands-on-Labs sessions from my list, and I have focused on sessions above the 100 level, making exceptions only for new topics.

Pricing

The big advantage of a remote event is that everyone can join without travelling; the big disadvantage, of course, is missing out on the socializing over some drinks :-) Everyone can register for the General Pass free of charge. You can also purchase a Premier Pass, which includes additional benefits such as more sessions, discussions with VMware engineers, 1:1 expert sessions, a certification discount, etc.

VMworld Session Recommendations

Now to my session recommendations, which are based on my experience from previous years and on topics that are interesting from a Network and Security point of view. But first, I have to say that every VMworld session is worth joining; for me, VMworld is the most important source of technical updates, innovation and training.

NSX Sessions - Infrastructure related

Large-Scale Design with NSX-T - Enterprise and Service Providers [VCNC1838]

Enhancing the Small and Medium Data Center Design Through NSX Data Center [VCNC1400]

Deploying VMware NSX-T in Traditional Data Center Infrastructure [VCNC1766]

Logical Routing in NSX-T [VCNC1264]

NSX on vSphere Distributed Switch: Update on NSX-T Switching [VCNC1197]

NSX-T Performance: Deep Dive [VCNC1149]

Demystifying the NSX-T Data Center Control Plane [VCNC1164]

NSX Federation: Everything About Network and Security for Multisites [VCNC1178]

NSX-T Deep dive: APIs Built for Automation [VCNC1417]

The Future of Networking with VMware NSX [VCNC1555]

NSX Sessions - Operation and Monitoring related

NSX-T Operations and Troubleshooting [VCNC1380]

Deep Dive: Troubleshooting Applications Without TCPdump [VCNC1920]

Automating vRealize Network Insight [VCNC1710]

Why vRealize Network Insight Is the Must-Have Tool for Network Monitoring [ISNS1285]

Discover, Optimize and Troubleshoot Infrastructure Network Connectivity [HCMB1376]

NSX Sessions - NSX V2T Migration related

Migration from NSX Data Center for vSphere to NSX-T [VCNC1150]

NSX Data Center for vSphere to NSX-T Migration: Real-World Experience [VCNC1590]

NSX Sessions - Advanced Load Balancer (AVI) related

How VMware IT Solved Load Balancer Problems with NSX Advanced Load Balancer [ISNS1028]

Active-Active SDDC with NSX Advanced Load Balancer Solutions [VCNC2043]

Load Balancer Self-Service: Automation with ServiceNow and Ansible [VCNC1390]

NSX Sessions - Container related

NSX-T Container Networking Deep Dive [VCNC1163]

Introduction to Networking in vSphere with Tanzu [VCNC1184]

How to Get Started with VMware Container Networking with Antrea [VCNC1553]

Introduction to Tanzu Service Mesh [MAP1231]

Connect and Secure Your Applications Through Tanzu Service Mesh [MAP2081]

Forging a Path to Continuous, Risk-Based Security with Tanzu Service Mesh [ISCS1917]

NSX Security Sessions

IDS/IPS at the Granularity of a Workload and the Scale of the SDDC with NSX [ISNS1931]

Demystifying the NSX-T Data Center Distributed Firewall [ISNS1141]

NSX Intelligence: Visibility and Security for the Modern Data Center [ISNS2496]

Micro-Segmentation and Visibility at Scale: Secure an Entire Private Cloud [ISNS1144]

Best Practices for Securing Web Applications with Intrinsic Protection [ISNS1441]

Network Security: Why Visibility and Analytics Matter [ISNS1686]

Protecting East-West Traffic with Distributed Firewalling and Advanced Threat Analytics [ISNS1235]

Network & Security and Cloud

NSX for Public Cloud Workloads and Service [VCNC1168]

Cloud Infrastructure & Workload Security: VMware Secure State & Carbon Black [ISWL2072 + 2754]

Investigate and Detect Cloud Vulnerabilities with VMware Secure State [ISCS1973]

Service-Defined Firewall Multi-Cloud Security Design [ISCS1030]

Azure VMware Solutions: Networking and Security Design & NSX-T [HCPS1576]

VMware Cloud on AWS: Networking Deep Dive and Emerging Capabilities [HCP1255]

NSX-T: Consistent Networking & Security in Hyperscale Cloud Providers [VCNC1425]

Intrinsic Security

Cloud Delivered Enterprise Remote Access and Zero Trust [ISNS2647]

Flexibly SOAR Toward API Functionality With Carbon Black [ISWS1095]

Remote Work Is Here to Stay: How Can IT Support the New Normal [DWDE2485]

Mapping Your Network Security Controls to MITRE ATT&CK [ISNS2793]

Transform Your Security to a Zero Trust Model [ISWL2796]

Intrinsic Security - VMware Carbon Black Cloud EDR

Become a Threat Hunter [ISWS2604]

Endpoint Detection & Response for IT Professionals [ISWS2690 + 2653]

VMware Carbon Black Audit and Remediation: The New Yes to the Old No [ISWS1241]

Intrinsic Security - VMware Carbon Black Workload

Intro to VMware Carbon Black Cloud Workload [ISWL2616]

Comprehensive Workload Security: vSphere, NSX, and Carbon Black Cloud [ISWL2618]

Vulnerability Management for Workloads [ISWL2617 + 2755]

Intrinsic Security - VMware Carbon Black Endpoint

Securing Your Virtual Desktop with VMware Horizon and VMware Carbon Black [ISWS1786]

VMware Security: VMware Carbon Black Cloud and Workspace ONE Intelligence [ISWS1074]

SD-WAN - VeloCloud

SD-WAN Sneak Peek: What's New Now and into the Future [VCNE2345]

Users Need Their Apps: How SD-WAN Cloud VPN Makes That Connection [VCNE2350]

VMware Cloud and VMware SD-WAN: Solutions Working in Harmony [VCNE2347]

Seeing Is Believing: AIOps, Monitoring and Intelligence for WAN and LAN [VCNE2384]

Why vRealize Network Insight Is the Must-Have Tool for Network Monitoring [ISNS1285]

VMware SD-WAN by VeloCloud, NSX, vRealize Network Insight Cloud [HCMB1485]

Summary

There are a lot of interesting VMworld sessions on many other topics as well, such as Cloud, End User Computing, vSphere, Cloud-Native Apps, etc. Don't worry if you miss a presentation: my colleague William Lam usually publishes the recordings on GitHub.

Here you can find the slides and recordings from VMworld 2019 US and EMEA:

https://github.com/lamw/vmworld2019-session-urls/blob/master/vmworld-us-playback-urls.md

Feel free to add comments below if you see other must-see sessions in the Network & Security area.

Terraform blueprint for a Horizon7 Ruleset with VMC on AWS

In this blog post I will write about Terraform for VMC on AWS with the NSX-T provider. I wrote over 800 lines of code without any prior experience in Terraform or programming. Terraform is super nice and easy to learn!

First of all, all my tests ran on a lab platform. Use the following code at your own risk; I won't be responsible for any issues you may run into. Thanks!

We will use the following solutions:

Terraform Version 0.12.30

VMC on AWS

Terraform NSX-T provider

If you are completely new to Terraform, I highly recommend reading all the blog posts by my colleague Nico Vibert about Terraform with VMC on AWS. He did an awesome job explaining it!

So, what will my code do?

My code will create several services, groups and distributed firewall rules. All rules are set to "allow", so implementing them shouldn't have any impact. The code is meant to help you build a secure Horizon environment. After you apply it, you can fill the created groups with IPs, servers or IP ranges. More details later!

Before we start, we need the following software installed: git, Go and Terraform.

My repository can be cloned from here. I will skip the basic installation of git, Go and Terraform and jump directly to my repository.

First of all, we need to clone the repository. Open a terminal window and use the following command: git clone https://github.com/vmware-labs/blueprint-for-horizon-with-vmc-on-aws

tsauerer@tsauerer-a01 Blueprint_Horizon % git clone https://github.com/xfirestyle2k/VMC_Terraform_Horizon
Cloning into 'VMC_Terraform_Horizon'...
remote: Enumerating objects: 4538, done.
remote: Counting objects: 100% (4538/4538), done.
remote: Compressing objects: 100% (2935/2935), done.
remote: Total 4538 (delta 1459), reused 4520 (delta 1441), pack-reused 0
Receiving objects: 100% (4538/4538), 23.88 MiB | 5.92 MiB/s, done.
Resolving deltas: 100% (1459/1459), done.
Updating files: 100% (4067/4067), done.

Change to the blueprint-for-horizon-with-vmc-on-aws/dfw-main folder with the following command: cd blueprint-for-horizon-with-vmc-on-aws/dfw-main

tsauerer@tsauerer-a01 VMC_Terraform_Horizon % ls -l
total 88
-rw-r--r--@ 1 tsauerer staff 1645 Jun 10 10:04 README.md
-rw-r--r--@ 1 tsauerer staff 30267 Jun 9 10:45 main.tf
-rw-r--r--@ 1 tsauerer staff 172 May 29 08:35 vars.tf
tsauerer@tsauerer-a01 VMC_Terraform_Horizon %

Let's test whether Terraform is installed and working correctly. With "terraform init" we can initialize Terraform and the provider plugins.

tsauerer@tsauerer-a01 VMC_Terraform_Horizon % terraform init
Initializing the backend...
Initializing provider plugins...
Terraform has been successfully initialized!
You may now begin working with Terraform. Try running "terraform plan" to see any changes that are required for your infrastructure. All Terraform commands should now work.
If you ever set or change modules or backend configuration for Terraform, rerun this command to reinitialize your working directory. If you forget, other commands will detect it and remind you to do so if necessary.

Success, Terraform initialized successfully. Next, we need to check whether we have the correct nsxt provider.

tsauerer@tsauerer-a01 VMC_Terraform_Horizon_Backup % t version
Terraform v0.12.24
+ provider.nsxt v2.1.0

Great, we have the nsxt provider installed.

I recommend using Visual Studio Code or Atom, which is what I use.

I created a new project in Atom and selected the folder we cloned from GitHub.

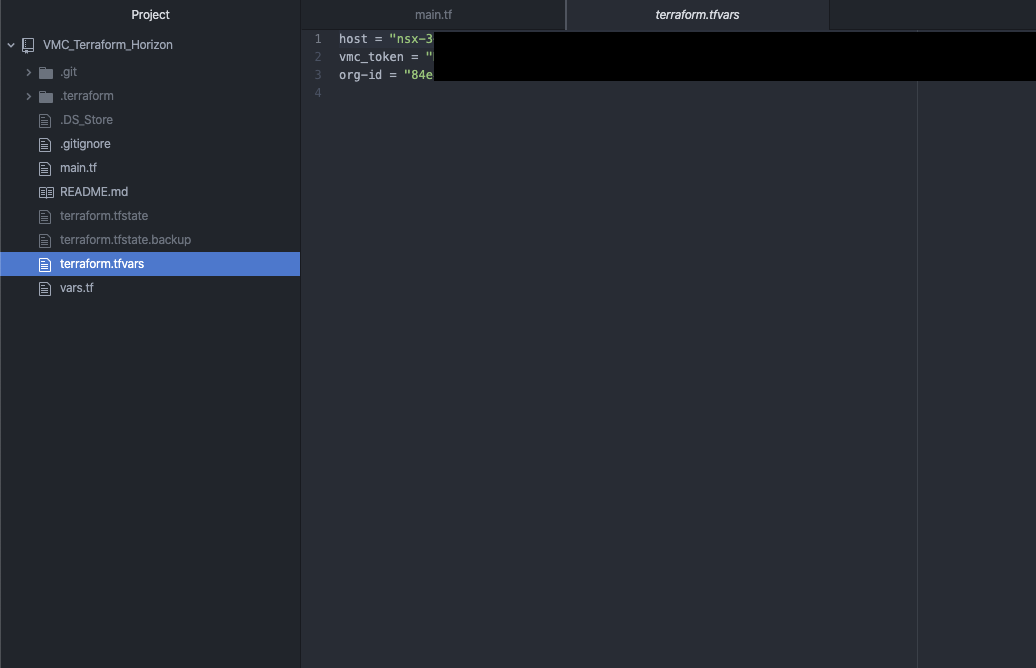

Three files are important. First, "main.tf": here you can find the code, i.e. what will be done.

"vars.tf", which declares the variables.

And the most important file, "terraform.tfvars", which we have to create ourselves, because this is where you will store all your secrets.

So now you need to create a new file and name it "terraform.tfvars". For NSX-T we only need three variables, which we already saw in the "vars.tf" file. So let's add:

Host = ""

vmc_token = ""

org-id = ""

Don't worry, I will show you where to find all the information. Let's start with the host information. "Host" is somewhat misleading in the VMware world: what we need here is the NSX-T reverse proxy URL. Go to your SDDC; on the left side you will find "Developer Center".

Go to "API Explorer", choose the SDDC you want to use and go to "NSX VMC Policy API". On the left, the "base URL" is your NSX reverse proxy URL.

Copy the URL and paste it into your "terraform.tfvars" file between the quotation marks. A small hint, because it cost me a few hours of troubleshooting: you have to remove the "https://", so the value starts with just "nsx……..".

Host = "nsx-X-XX-X-XX.rp.vmwarevmc.com/vmc/reverse-proxy/api/orgs/84e"

Next, we need our API token. This token is tied to your account. To create one, go to the top right, click on your name and go to "My Account".

On the last tab, "API Token", we need to generate a new API token.

Enter a name, a TTL period and your scope. I guess you only need "VMware Cloud on AWS" "NSX Cloud Admin", but I am not sure; my token had "All Roles". Generate the token, copy it and save it in a safe place! You will not be able to retrieve this token again.

vmc_token = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX"

Last, we need the org-id. Just go to one of your SDDCs and look at the "Support" tab, where you can find your org-id.

org-id = "XXXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXX"

If you are working with GitHub, I recommend creating a .gitignore file and adding "terraform.tfvars" to it, so that the file will not be uploaded to your repository. Take care of this file, all your secrets are inside :)! In the end, your file should have three lines:

Host = "nsx-X-XX-X-XX.rp.vmwarevmc.com/vmc/reverse-proxy/api/orgs/84e"

vmc_token = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX"

org-id = "XXXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXX"
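To catch a leftover "https://" in Host or an empty value before running Terraform, a small check can help. The following is a minimal Python sketch, not part of my repository: it assumes the simple `key = "value"` layout shown above and the variable names Host, vmc_token and org-id (adjust them if your vars.tf uses different names), and it does not parse full HCL.

```python
import re

# Matches simple `key = "value"` lines as used in the terraform.tfvars example.
# Hypothetical helper: this is not a full HCL parser.
LINE_RE = re.compile(r'^\s*([A-Za-z0-9_-]+)\s*=\s*"(.*)"\s*$')

# Variable names taken from the example file above.
REQUIRED_KEYS = ("Host", "vmc_token", "org-id")


def parse_tfvars(text: str) -> dict:
    """Collect key/value pairs from simple string assignments, skipping blanks and comments."""
    values = {}
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue
        match = LINE_RE.match(line)
        if match:
            values[match.group(1)] = match.group(2)
    return values


def check_tfvars(text: str) -> list:
    """Return a list of human-readable problems; an empty list means the file looks OK."""
    values = parse_tfvars(text)
    problems = []
    for key in REQUIRED_KEYS:
        if not values.get(key):
            problems.append(f"{key} is missing or empty")
    host = values.get("Host", "")
    # The reverse proxy URL must be entered without the scheme.
    if host.startswith(("https://", "http://")):
        problems.append("Host must not start with http:// or https://")
    return problems
```

Run it against the file's contents, for example `check_tfvars(open("terraform.tfvars").read())`; an empty list means nothing obvious is wrong.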

Perfect, we finished the preparation! We are ready to jump into the "main.tf" file.

I am creating 24 services, 16 groups and 11 distributed firewall sections with several rules; in the end, you will need to fill the groups with IPs/ranges/servers. So far I have focused only on Horizon-related services, groups and firewall rules, so if you want to enable an allowlist firewall, you have to add core services, groups and firewall rules for DNS, DHCP, AD, etc. I will keep working on my code to add everything necessary for an allowlist firewall ruleset, but for now it should give you an idea of how to do it and support your work.

But let's start to plan and apply the code. If you closed your terminal window, reopen it, change to our folder and re-initialize Terraform with “terraform init”.

With the command “terraform plan” you can review everything Terraform wants to do, and you can also check whether you created your secrets file correctly.

You can see that Terraform wants to create a lot of resources. “terraform apply” shows you again everything Terraform wants to create, and you need to approve it with “yes”. Once you have typed “yes”, you can lean back and watch the magic. After a few seconds you should see: Apply complete! Resources: XX added, 0 changed, 0 destroyed.
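With dozens of resources in the plan, counting them by type makes the review easier. A minimal sketch, assuming Terraform 0.12 or newer: save the plan with `terraform plan -out=tfplan`, export it with `terraform show -json tfplan > plan.json`, and summarize the `resource_changes` entries. The helper names are my own, and the resource type names you will see depend on what main.tf actually declares.

```python
import json
from collections import Counter


def load_plan(path: str) -> dict:
    """Read the JSON produced by `terraform show -json tfplan > plan.json`."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def summarize_plan(plan: dict) -> Counter:
    """Count planned actions per resource type, skipping resources that stay unchanged."""
    counts = Counter()
    for rc in plan.get("resource_changes", []):
        actions = rc["change"]["actions"]
        if actions == ["no-op"]:
            continue
        # A replacement is reported as two actions, e.g. ["delete", "create"].
        action = "replace" if len(actions) > 1 else actions[0]
        counts[(rc["type"], action)] += 1
    return counts
```

`summarize_plan(load_plan("plan.json"))` returns a counter keyed by (resource type, action), which you can compare against the 24 services, 16 groups and 11 sections mentioned above, assuming each of them maps to one Terraform resource.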

Let's take a look at VMC after applying our changes. First of all, we created groups… and we got groups!

Next, we need to check the services… and we got services as well!

Now we come to our distributed firewall. A bunch of sections have been created, with several rules in each section. I only created allow rules and all groups are empty, so no rule should impact anything!
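Why can rules with empty groups not affect traffic? The DFW evaluates rules top-down and the first matching rule wins; a rule whose source or destination group has no members can never match, so traffic falls through to whatever handled it before. A minimal Python model of that first-match logic follows; it leaves out services and Applied To, and the group, rule and VM names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    sources: set        # group names; "ANY" matches every VM
    destinations: set   # group names; "ANY" matches every VM
    action: str         # "ALLOW", "DROP" or "REJECT"


def group_match(groups_of_vm: set, rule_groups: set) -> bool:
    """True if the rule field is ANY or the VM is a member of one of its groups."""
    return "ANY" in rule_groups or bool(groups_of_vm & rule_groups)


def evaluate(rules, membership, src_vm, dst_vm, default_action="ALLOW"):
    """First-match evaluation: the first rule matching source and destination decides."""
    src_groups = membership.get(src_vm, set())
    dst_groups = membership.get(dst_vm, set())
    for rule in rules:
        if group_match(src_groups, rule.sources) and group_match(dst_groups, rule.destinations):
            return rule.name, rule.action
    return "default", default_action


# Hypothetical Horizon rule, similar in spirit to the ones created by the blueprint.
RULES = [Rule("UAG to Connection Server", {"Horizon_UAG"}, {"Horizon_Connection_Server"}, "ALLOW")]
```

With empty groups, `evaluate(RULES, {}, "uag01", "cs01")` falls through to the default rule. As soon as the groups are filled, the rule starts to match; because it is an allow rule, it still only permits traffic.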

Success :)! We applied groups, services and several rules that use those groups and services. If you run into any trouble or want to get rid of everything Terraform did, simply go back to your terminal and enter “terraform destroy”. It will check your environment for what needs to be deleted and give you an overview of what Terraform will do. Approve it with “yes” and all changes will be destroyed. It takes a few seconds, and then you will see: Destroy complete! Resources: XX destroyed.

If you have any questions, problems or suggestions feel free to contact me!

Some final words about Terraform: it is an awesome tool in the world of automation. I had no programming experience, but it took me only one or two weeks to get into it, and I had so much fun writing this code! It is super easy and super useful. I hope this code will help you, save you work and give you as much fun as I had :).

Integrating Druva Phoenix Cloud with VMC on AWS

Updated: June 16th 2020

This blog post is ONLY a showcase. I want to show you how easy a SaaS backup solution can be! We will implement it securely and back up a VM.

In this showcase we will use the following solutions:

Druva Phoenix SaaS Backup Solution

VMware Cloud on AWS

Distributed Firewall

About Druva:

Druva is a software company specializing in SaaS-based data protection managed from a single interface. It was built and born in the AWS Cloud. One of its products is Phoenix Cloud. Let's talk about some of the benefits of Phoenix Cloud; afterwards we will jump right in and take a deeper look.

Phoenix backs up everything to S3

Phoenix automatically archives older backups to Glacier

You only pay for the storage you consume after deduplication and compression

One Console for all Backups around the world

Phoenix Cloud is available in more than 15 regions

And those are just a few of the benefits.

Let's dive in..

After we log in to Phoenix Cloud, the console gives a very clean overview of your consumption and your environment. Druva provides a secure, multi-tenant environment for customer data: each customer gets a virtual private cloud (tenant), and all data is encrypted with a unique per-tenant AES-256 encryption key. Beyond all the security features Phoenix Cloud provides, let's not forget that Druva is built on AWS, which provides significant protection against network security issues. You will find the full security whitepaper here!

The first thing we want to do is create a new organization, for example to separate departments, regions, etc. By the way, Druva has great permission management, so each department can take care of its own backups.

To create a new organization, go to Organization and click "Add New Organization" at the top left. Name it, and you have created your first organization!

Afterwards, go to your organization, and Druva takes you directly to a "Get Started" page. We need to select a product, in our case VMware Setup.

Next, we need to download the Backup Proxy. Because we want to install it on VMC on AWS, we need the standalone Backup Proxy. Stay tuned, there's something coming soon ;).

While the download is running, we need to generate a new activation token for the proxy installation. You can set how many proxies you want to install and an expiry time.

Copy your token, you will need it for the installation.

Now, before we can start to deploy the proxy, we need to check the network on VMC. Let's go to the Compute Gateway firewall first.

The Druva proxy needs access to vCenter on port 443 as well as Internet access. So we create the following rules on the Compute Gateway:

Source: Druva-Proxy -> Destination: vCenter with Port: 443

Source: Druva-Proxy -> Destination: Any with Port: 443 applied to: internet interface

On the Management Gateway we need to open port 443 as well, inbound and outbound.

Druva only needs access to the Internet and to vCenter, so why shouldn't we restrict all other communication?

I wrote a Terraform script to automate this step. It creates groups, a service and a distributed firewall section with 4 allow rules and 2 disabled deny rules. The repo can be found here. You just have to fill the created groups (Druva_Proxy, Druva_Cache and, if needed, SQL-Server).

All my tests ran on a lab platform. Use this code at your own risk; I won't be responsible for any issues you may run into. Thanks!

If you prefer to do it yourself, here are the manual steps:

Let's go to our Distributed Firewall.

My demo environment is set to blacklist mode, so our first rules are:

Source: Druva-Proxy -> Destination: ANY with Service: ANY -> Reject!

Source: ANY -> Destination: Druva-Proxy with Service: ANY -> Reject!

Right now, all traffic is blocked directly at the vNIC of our Druva proxy.

Perfect! Next, we need to allow Internet traffic. This is trickier, because we are using our Internet gateway rather than a classic proxy.

So we create an RFC1918 group containing all private IP ranges (10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16) and use a negated destination selection. If you have a proxy server, just allow HTTPS traffic to your proxy; that should do the trick!

Source: Druva-Proxy -> Destination: is not! RFC1918 with Service 443 -> Allow!

Last, we have to allow outbound and inbound traffic between the proxy and vCenter, so we need two additional rules:

Source: Druva-Proxy -> Destination: vCenter with Service 443 -> Allow!

Optionally, you can add ICMP.

Source: vCenter -> Destination: Druva-Proxy with Service 443 -> Allow!

That's pretty much it, our application ruleset! On the infrastructure DFW level we could allow basic services like DNS, but Druva does not need anything else!
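The rule order matters here: the DFW evaluates rules top-down and stops at the first match, so the allow rules have to sit above the two reject rules created earlier. A minimal Python sketch of the resulting section, assuming hypothetical IP addresses for the proxy and vCenter, TCP port 443 as the only service, the optional ICMP rule left out, and a default rule that allows (blacklist mode):

```python
import ipaddress

# RFC 1918 private ranges; the negated "is not RFC1918" destination means none of these.
RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

# Hypothetical addresses, for illustration only.
DRUVA_PROXY = ipaddress.ip_address("192.168.10.20")
VCENTER = ipaddress.ip_address("10.2.224.4")


def is_rfc1918(ip) -> bool:
    return any(ip in net for net in RFC1918)


# (name, match(src, dst, port), action); evaluated top-down, first match wins.
RULES = [
    ("Proxy to Internet 443", lambda s, d, p: s == DRUVA_PROXY and not is_rfc1918(d) and p == 443, "ALLOW"),
    ("Proxy to vCenter 443", lambda s, d, p: s == DRUVA_PROXY and d == VCENTER and p == 443, "ALLOW"),
    ("vCenter to Proxy 443", lambda s, d, p: s == VCENTER and d == DRUVA_PROXY and p == 443, "ALLOW"),
    ("Proxy outbound reject", lambda s, d, p: s == DRUVA_PROXY, "REJECT"),
    ("Proxy inbound reject", lambda s, d, p: d == DRUVA_PROXY, "REJECT"),
]


def evaluate(src: str, dst: str, port: int):
    """Return (rule name, action) for a TCP flow."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for name, match, action in RULES:
        if match(s, d, port):
            return name, action
    return "default", "ALLOW"  # blacklist mode: the default rule allows
```

For example, `evaluate("192.168.10.20", "10.1.1.1", 443)` is rejected because the destination is private and is not vCenter, while HTTPS to a public address is allowed.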

I will skip the Backup Proxy installation; it is pretty straightforward. Choose Public Cloud, VMware Cloud on AWS, and do the basic setup: IP, token, NTP, vCenter and your VMC credentials.

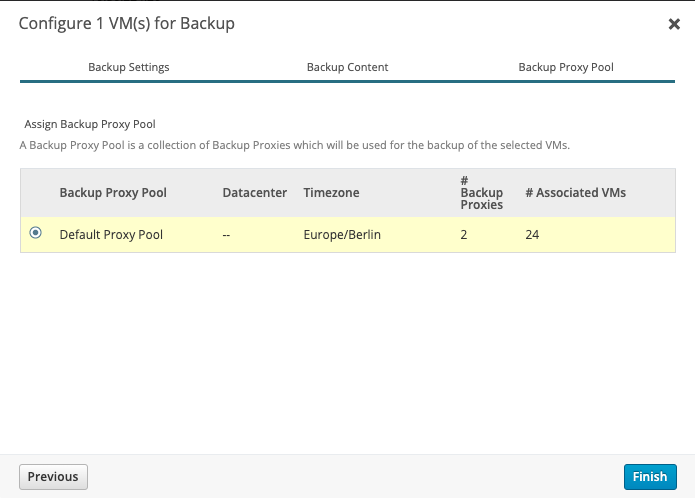

After the deployment is done, you will see your vCenter and VMs in Phoenix Cloud, and your Backup Proxy is added to a Proxy Pool. With the latest version of the Backup Proxy, we can deploy new proxies directly from the Phoenix console! Just go to your Backup Proxy Pool and hit "Deploy Proxies".

Choose your data center and your Backup Proxy Pool, and add as many proxies as you want.

Configure the VM network, an IP range, netmask, gateway and DNS server. In my case I do not need any proxy settings; if you are using a proxy, just enable "use web proxy" and provide your information. Don't forget to add your newly deployed proxies to your firewall group in VMC!
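Before deploying several proxies at once, it can help to sanity-check the IP range against the netmask and gateway. A minimal sketch using Python's ipaddress module; the function name and example addresses are hypothetical, and Druva may apply its own validation on top of this.

```python
import ipaddress


def plan_proxy_ips(first: str, last: str, netmask: str, gateway: str, count: int) -> list:
    """Validate a proxy IP range against its subnet and return the first `count` addresses."""
    first_ip, last_ip = ipaddress.ip_address(first), ipaddress.ip_address(last)
    gw = ipaddress.ip_address(gateway)
    # Derive the subnet from the gateway and netmask (host bits allowed, hence strict=False).
    subnet = ipaddress.ip_network(f"{gateway}/{netmask}", strict=False)
    if first_ip not in subnet or last_ip not in subnet:
        raise ValueError(f"range {first}-{last} is outside {subnet}")
    if first_ip > last_ip:
        raise ValueError("first address is greater than last address")
    if first_ip <= gw <= last_ip:
        raise ValueError("gateway lies inside the proxy range")
    size = int(last_ip) - int(first_ip) + 1
    if count > size:
        raise ValueError(f"range holds {size} addresses, {count} proxies requested")
    return [str(first_ip + i) for i in range(count)]
```

For example, `plan_proxy_ips("192.168.10.50", "192.168.10.59", "255.255.255.0", "192.168.10.1", 3)` returns the first three addresses of the range; the same addresses are the ones to add to the Druva_Proxy firewall group.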

Now we have to create our first Backup Policy. You can find your Backup Policies via Manage -> Backup Policies. Let's create our own Policy with custom settings.

Create new Backup Policy -> VMware. First of all name it and write a description.

Schedule it, in our case every day at 02:00 am, and set the duration and your maximum bandwidth. You can configure weekdays and the weekend separately, as I did: nobody works on the weekend, so I extended the duration there. It makes sense to ignore the backup duration for the first backup. But I guess you know your environment better than I do.
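To illustrate how separate weekday and weekend windows behave, here is a small Python model. The 02:00 start comes from my policy; the 4-hour weekday and 12-hour weekend durations are assumptions for the example, and the code only models the schedule, not how Druva enforces it.

```python
from datetime import datetime, time, timedelta

# 02:00 start from the policy above; the durations are hypothetical examples.
START = time(2, 0)
WEEKDAY_DURATION = timedelta(hours=4)
WEEKEND_DURATION = timedelta(hours=12)


def window_for(day: datetime) -> tuple:
    """Return (start, end) of the backup window that begins on the given calendar day."""
    start = datetime.combine(day.date(), START)
    # weekday() is 5 for Saturday and 6 for Sunday.
    duration = WEEKEND_DURATION if start.weekday() >= 5 else WEEKDAY_DURATION
    return start, start + duration


def in_backup_window(moment: datetime) -> bool:
    """True if a backup may run at `moment`."""
    # A long window could spill past midnight, so also check the one that started the day before.
    for offset in (0, 1):
        start, end = window_for(moment - timedelta(days=offset))
        if start <= moment < end:
            return True
    return False
```

With these example values, a job still running at 10:00 on a Saturday is inside its window, while the same time on a Tuesday is not.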

Retention: in my case daily for 30 days, weekly for 24 weeks, monthly for 12 months and yearly snapshots for 10 years. Set it depending on your workload. I also enabled LTR (Long Term Retention), which automatically moves all cold-tier backups to Glacier.
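A quick back-of-the-envelope check of what this retention means. The sketch assumes each tier keeps its own distinct snapshots (so the total is an upper bound) and approximates a month as 30 days and a year as 365 days:

```python
from datetime import timedelta

# Retention settings from the example policy: (number of snapshots, spacing).
RETENTION = {
    "daily": (30, timedelta(days=1)),
    "weekly": (24, timedelta(weeks=1)),
    "monthly": (12, timedelta(days=30)),   # a month approximated as 30 days
    "yearly": (10, timedelta(days=365)),   # a year approximated as 365 days
}


def max_restore_points(retention: dict) -> int:
    """Upper bound on stored restore points, assuming no snapshot is shared between tiers."""
    return sum(count for count, _ in retention.values())


def retention_horizon(retention: dict) -> dict:
    """Roughly how far back each tier reaches."""
    return {tier: count * step for tier, (count, step) in retention.items()}
```

With these settings you keep at most 76 restore points, and the yearly tier reaches back roughly ten years.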

Next come some VMware-specific settings: auto-enable CBT (Changed Block Tracking), VMware Tools quiescing and application-aware processing.

That's it! Meanwhile, you should notice in your Phoenix Cloud console that your proxy communicates with Druva Phoenix Cloud, and you can see some information about your vCenter. Next, we need to configure VMs for backup. Let's go to Protect -> VMware. Here you have an overview of the number of total VMs, configured VMs and your Backup Proxy Pools/number of Backup Proxies.

To configure a VM, go to your vCenter/hypervisor, select one or more VMs and select "Configure VM for Backup".

Choose your storage, in my case eu-central-1 (Frankfurt data center), an administrative group (useful for organization and management purposes) and the backup policy we created earlier, in my case BlogPolicy. If you have more backup policies, you can always see the details after selecting a policy.

Next, you can exclude disks by name. In my case we do not exclude any disks, but this could be useful for a database server, for example.

Select your Backup Proxy Pool and you are good to go. Your virtual machine is now configured!

You will find your VM under "Configured Virtual Machines". To test it, let's start a backup now: select the VM, hit "Backup Now" and confirm that you really want to start now.

You will find your Job in Jobs -> VMware.

For detailed information, click the Job ID to see a summary and a progress log; if something went wrong, you can also download detailed logs here.

Above you can see the result! For the first backup of our VM, we transferred nearly 19 GB at a speed of 196 GB/hr, and the backup duration was under 10 minutes.
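The numbers are consistent: at a constant 196 GB/hr, 19 GB takes about 5.8 minutes of pure transfer time, comfortably under the 10 minutes reported. A one-line check, assuming the rate was sustained for the whole job:

```python
def transfer_minutes(size_gb: float, rate_gb_per_hour: float) -> float:
    """Minutes needed to move size_gb at a constant rate_gb_per_hour."""
    return size_gb / rate_gb_per_hour * 60


minutes = transfer_minutes(19, 196)
print(f"{minutes:.1f} min")
```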

Some closing words: Druva Phoenix Cloud is a great SaaS backup solution! It is easy to use and at the same time very detailed. Druva has engineered a next-gen solution that takes the backup world to the next level.

I had the chance to get in contact with pre-sales, sales, support, engineering and product management. It was a pleasure; in each of them you could feel the love and passion for the product.

Special Thanks to Martin Edwards, Saurabh Sharma, Anukesh Nevatia and the rest of the Druva Team!

VMware Carbon Black Cloud for Endpoint Security

The VMware Carbon Black Cloud is a cloud-native endpoint protection platform (EPP) that provides what you need to secure your endpoints, using a single lightweight agent and an easy to use console.

VMware Carbon Black provides:

Superior Protection

Actionable Visibility

Simplified Operations

We will secure our VMC on AWS Horizon Environment with Carbon Black endpoint protection.

First, let's take a look at the console. It is a web-based console hosted in an AWS data center (in different GEOs). You can log in via SSO or e-mail and password, with 2FA via Duo Security or Google Authenticator. The dashboard gives you a good overview of what is going on, including any events or issues, which can easily be filtered by policy or by day/week.

Carbon Black has a really great community, check it out! https://community.carbonblack.com/

Let's get CB rolling and define a policy group. In a policy group you can define all kinds of settings: what should happen if something gets detected, or simple things like when the system should be scanned. To create a new policy, go to Enforce -> Policy.

Add a new policy, name it, add a description and copy the settings from the Standard policy group. You can customize the message the user sees when Carbon Black blocks something. In my case it shows the Carbon Black logo and tells users to contact me:

<table><tr><td><a href="https://www.carbonblack.com/"><img src="https://media.glassdoor.com/sqll/371798/carbon-black-squarelogo-1528288481334.png" width=32 height=32></a></td> <td> Contact Thomas Sauerer, he is your admin</td></tr></table>

Let's go to the next tab, “Prevention”. Here we can allow applications to bypass Carbon Black so that the client is able to use them. A common example is PowerShell: add a new application path, enter “**\powershell.exe” and select bypass if any operation is performed.
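A minimal sketch of how a path pattern like `**\powershell.exe` could be matched against Windows paths. The wildcard semantics here are my assumption (`**` matches any characters including backslashes, `*` and `?` stay within one path component, matching is case-insensitive), so check the Carbon Black documentation for the exact rules.

```python
import re


def cb_path_to_regex(pattern: str) -> re.Pattern:
    """Translate a wildcard path pattern into a case-insensitive regex (assumed semantics)."""
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            parts.append(".*")            # any characters, across folders
            i += 2
        elif pattern[i] == "*":
            parts.append(r"[^\\]*")       # any characters within one folder name
            i += 1
        elif pattern[i] == "?":
            parts.append(r"[^\\]")        # exactly one character, not a separator
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts), re.IGNORECASE)


def path_matches(pattern: str, path: str) -> bool:
    return cb_path_to_regex(pattern).fullmatch(path) is not None
```

Under these assumptions, `**\powershell.exe` matches the system PowerShell but also a copy named powershell.exe in any other folder, such as a user's Downloads directory, so a bypass rule this broad is a trade-off worth testing before rollout.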

Blocking and Isolation: here we can decide what happens when known malware is detected. In our case we want to terminate the process if it is running or starts to run. Keep in mind that you can always test each individual rule, so you can try everything out safely without any business impact!

Next, we can block applications that are on the company blacklist. Here we can be more specific: you can deny or terminate network communication, or block the application if it injects code or modifies the memory of other processes. As I mentioned before, test your rules and use this awesome feature!

For adware or PUPs (potentially unwanted programs) and unknown applications, I chose to terminate the process if it performs any ransomware-like behavior.
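To summarize the prevention settings above, here is a hypothetical Python model of the decision table: the first entry matching the application's reputation and operation decides the action, and a test flag reports what would happen without enforcing it. The labels are illustrative and not Carbon Black API values, and the first-match ordering is an assumption.

```python
# Hypothetical decision table mirroring the prevention settings described above.
# (reputation, operation, action); the first matching entry wins.
POLICY = [
    ("KNOWN_MALWARE", "RUNS_OR_IS_RUNNING", "TERMINATE"),
    ("COMPANY_BLACKLIST", "COMMUNICATES_OVER_NETWORK", "DENY"),
    ("COMPANY_BLACKLIST", "INJECTS_CODE_OR_MODIFIES_MEMORY", "DENY"),
    ("ADWARE_PUP", "RANSOMWARE_LIKE_BEHAVIOR", "TERMINATE"),
    ("UNKNOWN", "RANSOMWARE_LIKE_BEHAVIOR", "TERMINATE"),
]


def decide(reputation: str, operation: str, test_mode: bool = False) -> str:
    """Return the action for an application event; test mode only reports the action."""
    for rep, op, action in POLICY:
        if rep == reputation and op == operation:
            return f"WOULD_{action}" if test_mode else action
    return "ALLOW"
```

Running a rule in test mode first, for example `decide("UNKNOWN", "RANSOMWARE_LIKE_BEHAVIOR", test_mode=True)`, mirrors the advice above: you see what would be blocked before anything is actually terminated.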

You can also change local scan settings, such as on-access file scanning, scan frequency and more. Keep this in mind if you need to exclude specific files or folders from on-access scans. You can also change the update servers for offsite and internal devices. That is important if you have mobile devices that are not directly connected, and for different regions it may make sense to use a local update server. Update servers are reachable via HTTPS as well, so just change the URLs to https.

On the last tab, "Sensor", you can edit the client's sensor settings. I will deploy CB to our demo and test environment, so in this case I allow users to disable protection. Usually you will not allow users to disable security! Guess what: if users can disable it, most of them will. Here you can also activate "Sensor UI: Detailed message", which shows the message we defined earlier.

Next, we need to create an endpoint group. With endpoint groups you can define criteria to separate different workloads and assign them automatically to a policy. To do this, go to the "Endpoint" section on the left side and add a new group.

It makes sense to separate different workloads, such as Horizon, web servers, etc. You can set criteria like IP range or operating system to automatically add servers to different endpoint groups.
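Automatic assignment can be pictured as a first-match check over the group criteria. A minimal Python sketch, assuming hypothetical group names, IP ranges and an OS-prefix criterion, with a fallback to a default group; Carbon Black's actual matching logic may differ.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class EndpointGroup:
    name: str
    policy: str
    ip_range: Optional[str] = None    # CIDR the endpoint's IP must fall in, or None to ignore
    os_prefix: Optional[str] = None   # OS name prefix such as "Windows", or None to ignore

    def matches(self, ip: str, os_name: str) -> bool:
        if self.ip_range and ipaddress.ip_address(ip) not in ipaddress.ip_network(self.ip_range):
            return False
        if self.os_prefix and not os_name.startswith(self.os_prefix):
            return False
        return True


def assign(groups, ip: str, os_name: str, default=("Default", "Standard")):
    """Return (group, policy) for the first matching group, else the default group."""
    for group in groups:
        if group.matches(ip, os_name):
            return group.name, group.policy
    return default


# Hypothetical groups for illustration.
GROUPS = [
    EndpointGroup("Horizon", "Horizon-Policy", ip_range="192.168.20.0/24", os_prefix="Windows"),
    EndpointGroup("WebServer", "WebServer-Policy", ip_range="192.168.30.0/24"),
]
```

Changing an endpoint's IP from the Horizon range to the web server range moves it to the other group and policy, which mirrors the automatic update described in the next step.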

Last step: we need to install the Carbon Black sensor. It makes sense to add the sensor directly to your base images and also to define a default/general endpoint group with a basic ruleset to which all clients are added. When you change, for example, a server's IP address, the endpoint group is updated automatically and the server is moved to the new policy ruleset. In my case I will just install the sensor manually.

To download the sensor, go to Endpoints -> All Sensors; at the top right you will find Sensor Options -> Download Sensor kits.

Run the installer on the target system, accept the terms and enter the license key. Done, the sensor is installed! Take a look back at "Endpoints" in the console: the VM has automatically been added to the correct group and policy.

IDS/IPS with NSX-T

VMware announced NSX-T version 3.0 on 7 April 2020. It is a major release with many new features in the areas of Intrinsic Security, Operations, Multisite (Federation), Containers, Load Balancing, VPN, Routing, Automation and Cloud connectivity. In this blog post I want to take a closer look at the Intrusion Detection System/Intrusion Prevention System (IDS/IPS) feature.

Most companies use IDS for east-west security inside the data centre and IPS for north-south security. With the NSX-T 3.0 release, IDS is available; IPS will be provided in future releases.

What is an IDS/IPS system?

IDS/IPS systems can be implemented in hardware or software. They protect against exploits of vulnerabilities, i.e. malicious attacks on an application or service that attackers use to disrupt it or gain control of it. IDS/IPS technology relies on signatures to detect attempts to exploit vulnerabilities in applications. These signatures are comparable to regular expressions that compare network traffic against malicious traffic patterns.

IDS (Intrusion Detection System) is, as the name says, a monitoring system, whereas IPS (Intrusion Prevention System) is a control system that blocks traffic when an attack is detected. This means that with IPS, a false positive blocks legitimate traffic.
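To make the signature idea concrete, here is a toy Python sketch: each signature is a regular expression applied to the payload, IDS mode only raises alerts, and IPS mode also drops the traffic. The two signatures are simplified examples I made up, not Trustwave or NSX signatures.

```python
import re
from dataclasses import dataclass


@dataclass
class Signature:
    name: str
    pattern: re.Pattern


# Toy signatures for illustration only; real rule sets are far more precise.
SIGNATURES = [
    Signature("Directory traversal attempt", re.compile(rb"\.\./\.\./")),
    Signature("SQL injection UNION SELECT", re.compile(rb"union\s+select", re.IGNORECASE)),
]


def inspect_payload(payload: bytes, mode: str = "IDS"):
    """Return (verdict, alerts). IDS only alerts; IPS also drops matching traffic."""
    alerts = [sig.name for sig in SIGNATURES if sig.pattern.search(payload)]
    if alerts and mode == "IPS":
        return "DROP", alerts
    return "PASS", alerts
```

Note the false-positive risk: `inspect_payload(b"How to write a UNION SELECT query", mode="IPS")` drops a harmless request, whereas IDS mode would only have raised an alert.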

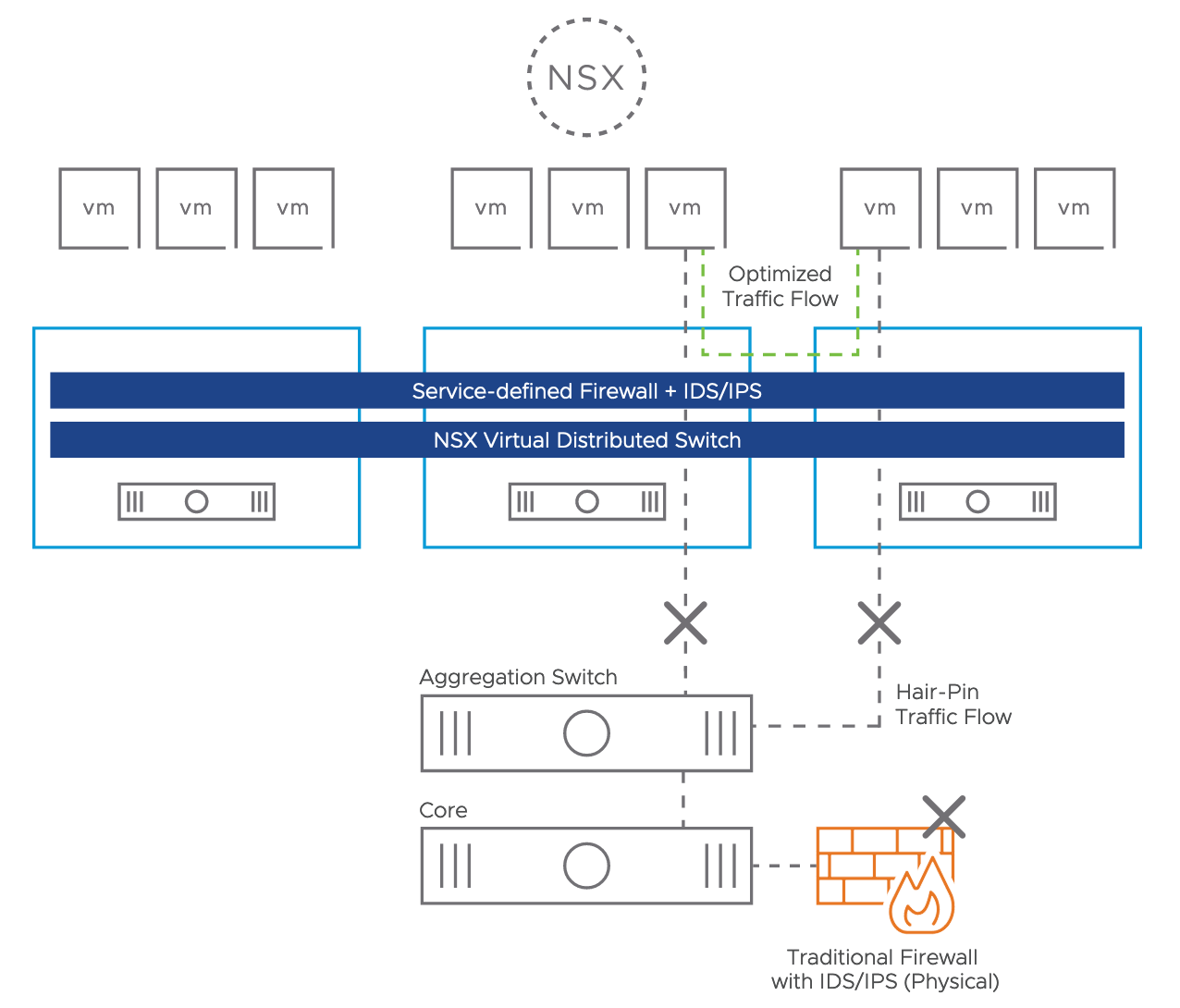

How does IDS run with NSX?

As already mentioned, VMware introduced IDS (Intrusion Detection System) with the NSX-T 3.0 release. The IPS function will follow in the coming months.

IDS is hypervisor-based and sits in front of the vNIC on the ESXi host (see picture 1). The design is based on the NSX DFW (Distributed Firewall) concept. No agent is necessary; the communication is realized via VMware Tools. A VIB (vSphere Installation Bundle) is rolled out during host preparation. This approach avoids hair-pinning: instead of sending traffic to a traditional firewall with IDS/IPS, the function runs directly at the host level, without any dependency on the network topology or IP address ranges.

The signatures are delivered by the cloud service provider Trustwave directly to the NSX Manager. For this, the NSX Manager needs Internet access; offline downloads are also supported. Signature updates can be applied immediately, daily or bi-weekly.

Picture 1: IDS System Hypervisor based

What are the use cases for IDS with NSX?

DMZ (Demilitarized Zone)

NSX IDS makes it possible to establish a DMZ (Demilitarized Zone) in software. One approach is to realize this completely at the virtualization level; another is to use dedicated ESXi hosts for the DMZ. The NSX Distributed Firewall (DFW) and the Distributed IDS allow customers to run workloads for different tenants on a centralized infrastructure.

Detecting Lateral Threat Movement

Usually the initial attack is not the actual objective; attackers try to move through the environment to reach the real target. The NSX Distributed Firewall (DFW) with Layer-7 App-ID features helps a lot to prevent attackers from exploiting this. However, the “WannaCry” ransomware, for example, spreads via SMB on ports 445 and 139 and could not be stopped by the NSX DFW alone wherever SMB traffic is permitted. With IDS technology, the attack can be detected so that it does not spread to other machines.

Replace physical IDS Systems

Another use case is to replace physical firewalls or IDS appliances with NSX.

Meet regulatory compliance

Many data center workloads have Intrusion Detection System (IDS) requirements for regulatory compliance, e.g. the Health Insurance Portability and Accountability Act (HIPAA) for healthcare, and the Payment Card Industry Data Security Standard (PCI DSS) or the Sarbanes-Oxley Act (SOX) for finance.

How does IDS work with NSX?

The installation effort and the operational overhead are low when NSX is already implemented. Only a few steps are necessary to get the IDS function up and running.

1. Configure IDS Settings

The NSX-T Manager needs internet access to download the signatures, either automatically (auto-update) or manually. An internet proxy (HTTP/HTTPS) can also be defined. The VIB (vSphere Installation Bundle) can be rolled out per cluster or per standalone ESXi host (see picture 2).

Picture 2: Configure IDS Settings

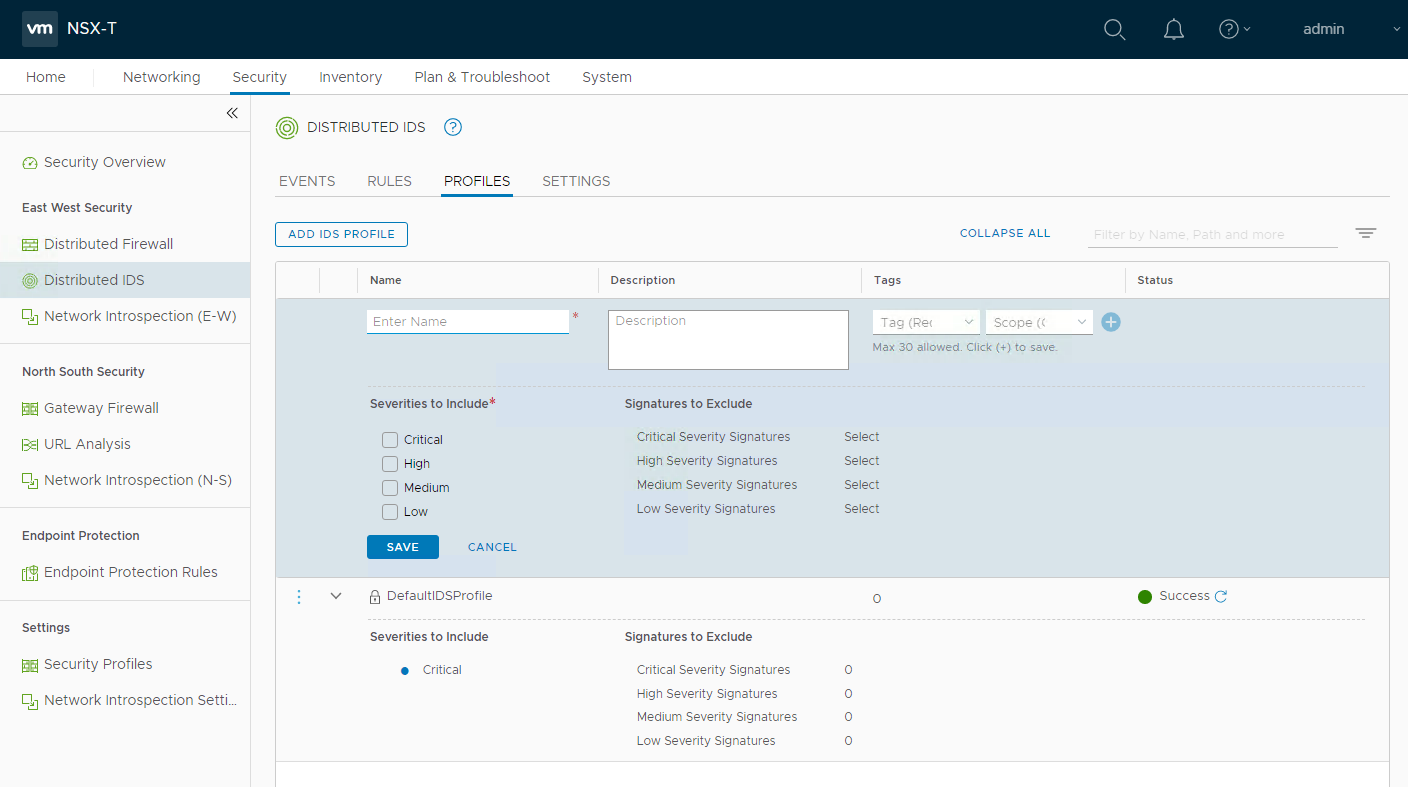

2. Configure IDS Profile

The second step is to configure an IDS profile if you do not want to use the default profile (see picture 3). In this step you define which severities are covered (Critical, High, Medium, Low); the severities are based on the CVSS (Common Vulnerability Scoring System) score.

Picture 3: Configure IDS Profile
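The CVSS v3.x specification defines a qualitative severity scale (Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0). The Python sketch below maps scores to those bands and keeps only the signatures whose severity a profile includes. It assumes the NSX profile severities follow these standard bands; the signature IDs and scores are made up.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def apply_profile(signatures, included):
    """Keep the IDs of signatures whose severity is selected in the (hypothetical) profile."""
    return [sig_id for sig_id, score in signatures if cvss_severity(score) in included]


# Hypothetical (signature ID, CVSS score) pairs
sigs = [(1001, 9.8), (1002, 7.5), (1003, 5.3), (1004, 2.1)]
print(apply_profile(sigs, {"Critical", "High"}))  # [1001, 1002]
```

Narrowing a profile to Critical and High reduces noise, at the cost of not seeing lower-rated signatures at all.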

3. Configure IDS Rules

The last IDS configuration step is to create a policy with IDS rules (see picture 4). IDS rules are administered much like DFW firewall rules. An IDS rule consists of a name, sources, destinations, services, an IDS profile and the “Applied To” field.

Picture 4: Configure IDS rules
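To show how those rule fields fit together, here is a hypothetical Python model of an IDS rule and a lookup that picks the IDS profile for a flow. It is not the NSX API: groups are plain names, `ANY` is a wildcard, and it assumes rules are evaluated top-down with the first match winning, as DFW rules are.

```python
from dataclasses import dataclass, field

ANY = "ANY"


@dataclass
class IdsRule:
    name: str
    sources: set       # group names, or {ANY}
    destinations: set  # group names, or {ANY}
    services: set      # service names such as "SMB", or {ANY}
    profile: str       # IDS profile applied when the rule matches
    applied_to: set = field(default_factory=lambda: {ANY})  # where the rule is enforced


def _hit(rule_side: set, groups: set) -> bool:
    """True if the rule field is a wildcard or shares at least one group."""
    return ANY in rule_side or bool(rule_side & groups)


def select_profile(rules, src_groups, dst_groups, service, vm_groups):
    """Return the profile of the first matching rule, or None.

    vm_groups are the groups of the VM whose vNIC sees the traffic (Applied To)."""
    for rule in rules:
        if (_hit(rule.applied_to, vm_groups)
                and _hit(rule.sources, src_groups)
                and _hit(rule.destinations, dst_groups)
                and _hit(rule.services, {service})):
            return rule.profile
    return None


rules = [
    IdsRule("pacs-smb", {ANY}, {"PACS"}, {"SMB"}, "critical-high", applied_to={"PACS"}),
    IdsRule("catch-all", {ANY}, {ANY}, {ANY}, "default"),
]
print(select_profile(rules, {"Clients"}, {"PACS"}, "SMB", {"PACS"}))  # critical-high
print(select_profile(rules, {"Clients"}, {"Web"}, "HTTPS", {"Web"}))  # default
```

The “Applied To” field narrows where a rule is enforced, so the PACS-specific rule is only evaluated on PACS vNICs.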

4. Monitor IDS Events

Once IDS is configured, events can be monitored on the dashboard (see picture 5).

Picture 5: Monitor IDS events
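A dashboard like this essentially aggregates events. The Python sketch below counts hypothetical events per severity and flags signatures seen on several VMs, which can hint at lateral movement. The event fields, signature names and the spread threshold are assumptions for illustration, not the NSX event format.

```python
from collections import Counter

# Hypothetical IDS events, similar in spirit to what the dashboard lists
events = [
    {"signature": "SMB exploit attempt", "severity": "Critical", "vm": "pacs-01"},
    {"signature": "SMB exploit attempt", "severity": "Critical", "vm": "pacs-02"},
    {"signature": "SMB exploit attempt", "severity": "Critical", "vm": "ris-01"},
    {"signature": "Suspicious DNS query", "severity": "Medium", "vm": "web-01"},
]


def summarize(events, spread_threshold=2):
    """Count events per severity and list signatures seen on several VMs.

    A signature hitting many VMs is only a heuristic hint at lateral movement."""
    per_severity = Counter(e["severity"] for e in events)
    vms_per_signature = {}
    for e in events:
        vms_per_signature.setdefault(e["signature"], set()).add(e["vm"])
    spreading = sorted(sig for sig, vms in vms_per_signature.items()
                       if len(vms) >= spread_threshold)
    return per_severity, spreading


per_severity, spreading = summarize(events)
print(dict(per_severity))  # {'Critical': 3, 'Medium': 1}
print(spreading)           # ['SMB exploit attempt']
```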

Summary

IDS (Intrusion Detection System) is another major step for NSX in the Intrinsic Security area. IDS in software, distributed and built-in analysis, no hair-pinning of traffic, regulatory compliance, support for DMZ requirements, detection of lateral threat movement and simple operations are all arguments for evaluating this new feature.

5 Security Trends for 2020 you better start following

No 1: Democratization of Security

Security no longer belongs exclusively to security teams. Security must be built into every part of the infrastructure at every moment. The faster we accept this, the faster it will speed up the business. Security must be the guardrail that protects our operations.

Several topics help make this real, to name just a few:

Visibility

Assets, data, flows, hashes, ports and protocols, vulnerabilities. You are building your infrastructure, you are responsible for your applications, and maybe you are building your own software. You should know your infrastructure better than anybody else, because it’s yours!

What is the most important item on your checklist? Usability. Everybody needs tools that are easy to use, that people accept and that are fun to work with. Nobody wants a week of training to work with a new tool. However you define it, usability must be on your checklist next to all the requirements the product needs to fulfil.

What is the second most important item? Look for solutions that are not siloed. A solution has to be consumable by more than one department. For example, communication flows need to be analyzed by application owners, security analysts and network experts: of course it is about connectivity, but it is also about needs and guidelines. There is a business to run, and IT in general can enable the business or even make it faster, but only if its teams work together. Simple and secure.

Automation: there are (at least) two things that drive automation

Reacting fast to new attack vectors: can you easily block specific ports and protocols for parts of the datacenter or for the whole datacenter? You need automation to make this possible. Strictly speaking this is configuration management, and automation is what makes it fast.
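As an illustration, the Python sketch below generates one drop rule per scope for a given port and protocol, ready to be handed to whatever configuration management pushes rules into your environment. The rule dictionary format, the scope names and `emergency_block_rules` are hypothetical; this is not a vendor API.

```python
def emergency_block_rules(port: int, protocol: str, scopes: list) -> list:
    """Build one drop rule per scope for a port/protocol (hypothetical rule format)."""
    if not 1 <= port <= 65535:
        raise ValueError("invalid port")
    proto = protocol.upper()
    if proto not in {"TCP", "UDP"}:
        raise ValueError("protocol must be TCP or UDP")
    return [
        {
            "name": f"emergency-block-{proto.lower()}-{port}-{scope}",
            "applied_to": scope,  # a segment, security group or "datacenter"
            "service": {"protocol": proto, "destination_port": port},
            "action": "DROP",
        }
        for scope in scopes
    ]


# Block SMB for two hospital zones in one step
block_rules = emergency_block_rules(445, "tcp", ["radiology", "lab"])
for rule in block_rules:
    print(rule["name"])
```

Generating rules from a few parameters, instead of clicking them together, is what makes the reaction fast and repeatable.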

Still working with a checklist to add security after the workload has been created?

Apply your security concept and guidelines the moment you instantiate your workloads.

Example 1: You need to add another web server for a specific application. You then apply the security settings already defined for the existing web servers, which normally means assigning the same security group/tag.

Example 2: You need to add a new workload from a template, and its category will only be defined later, during staging. Place such workloads in a staging security group that gives them access to what they need: installation sources for applications, central IT services, and security tooling such as update servers or the endpoint security solution (if required).
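Both examples boil down to assigning a security group at instantiation time. The Python sketch below assumes a hypothetical tag-to-group mapping: a workload tagged like its peers inherits their group (Example 1), and an untagged workload lands in a staging group (Example 2). Group and tag names are made up for illustration.

```python
# Hypothetical mapping from workload tag to security group
TAG_TO_GROUP = {
    "web": "sg-web",
    "db": "sg-db",
    "pacs": "sg-pacs",
}
# Staging: access to install sources, central IT services, update/endpoint servers
STAGING_GROUP = "sg-staging"


def assign_group(tags: set) -> str:
    """Place a new workload into a security group the moment it is instantiated."""
    matches = sorted(TAG_TO_GROUP[t] for t in tags if t in TAG_TO_GROUP)
    if len(matches) == 1:
        return matches[0]     # Example 1: same rules as the existing peers
    if not matches:
        return STAGING_GROUP  # Example 2: category not defined yet
    # Conflicting tags are treated as a configuration error, not guessed
    raise ValueError(f"ambiguous tags: {sorted(tags)}")


print(assign_group({"web"}))            # sg-web
print(assign_group({"owner:it-ops"}))   # sg-staging
```

Raising on conflicting tags is a design choice: it surfaces a tagging mistake instead of silently granting the wrong access.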

Zero Trust

Zero Trust is a framework that needs to be translated to your organization: moving from a “trust but verify” to a “never trust, always verify” approach. This model considers all resources to be external and continuously verifies trust before granting only the required access. It should be end-to-end, which is why your organization needs to be aware of risk and trust. Zero Trust is a main topic in its own right, so have a look at No 3.

Security is a Team Sport!

No 2: Real-Time Vulnerability Assessment

Is software more vulnerable today? The European Union Agency for Cybersecurity (ENISA) raised this question in March 2018. Edgescan’s Vulnerability Statistic Report (2019) also looked back at 2018: 81% of vulnerabilities were found in the network and 19% at Layer 7. The most critical ones, however, come from the application layer (Layer 7).

Who is responsible for vulnerability assessment? Security: scan the environment every 10 to 15 days, provide a report to the infrastructure owners and make them patch the systems. The number of vulnerabilities is growing faster than ever (more devices and applications), which is why we need a new approach.

Real-time vulnerability assessment: everybody needs to know when vulnerable versions are present, and mostly the fix is just patching. Real-time assessment also reduces the performance impact, as the service runs a full assessment once and afterwards only watches for deltas. A risk score shows which patches to prioritize. Patching can be time-consuming, which is why you need to know what to patch first.
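To illustrate patching by priority, here is a Python sketch with a deliberately simple, made-up risk score: the CVSS base score weighted by whether an exploit is available and by how critical the asset is. The weighting, the criticality scale and the CVE placeholders are assumptions; real products use their own scoring models.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    host: str
    cve: str                # placeholder identifiers below
    cvss: float             # CVSS base score, 0.0 to 10.0
    exploit_available: bool
    asset_criticality: int  # hypothetical scale: 1 (low) to 3 (patient-critical)


def risk_score(f: Finding) -> float:
    """Illustrative risk score: CVSS weighted by exploitability and asset criticality."""
    exploit_factor = 1.5 if f.exploit_available else 1.0
    return round(f.cvss * exploit_factor * f.asset_criticality, 2)


def patch_order(findings):
    """Highest risk first; ties broken by host name for a stable report."""
    return sorted(findings, key=lambda f: (-risk_score(f), f.host))


findings = [
    Finding("web-01", "CVE-XXXX-0001", 9.8, False, 1),
    Finding("pacs-01", "CVE-XXXX-0002", 7.5, True, 3),
    Finding("lab-01", "CVE-XXXX-0003", 5.0, True, 2),
]
print([f.host for f in patch_order(findings)])  # ['pacs-01', 'lab-01', 'web-01']
```

The point of the example: a lower CVSS score on a patient-critical system with a known exploit can outrank a higher score on a less critical server.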

No 3: Zero Trust (Framework)

Zero Trust is one of the most used buzzwords in security right now, as it is applied to almost everything. There are different sources; the most accurate starting point is John Kindervag of Forrester in 2010, who proposed a model that relies on continuous verification of trust. From the definition on Wikipedia: Zero Trust is an information security framework which states that organizations should not trust any entity inside or outside of their perimeter at any time. It provides the visibility and IT controls needed to secure, manage and monitor every device, user, app and network being used to access business data. It also involves on-device detection and remediation of threats.

Especially for end-to-end security, it is necessary to consider everything involved in the communication: identity (user), device, applications, connections, accessed services, accessed data, and anything the connection opens in turn, since a request to a web server also opens new connections to apps and then to databases.

Potentially the most challenging point is applying the framework across your collection of providers of secure software and hardware. Only a handful of companies can help you provide end-to-end visibility and powerful security controls to reach a zero trust / always verify model without slowing down or stopping the business.

Combining security controls and security products provides better security, which also stands for the democratization of security. You need to understand identity authority and how to manage endpoints to fully secure users and devices. The combination of the unified endpoint management “Workspace One” and the endpoint security “Endpoint Standard”, powered by VMware Carbon Black, is a perfect example. The unified device management seems to be unique on the market, with features like identity and device trust. Who is trying to authenticate, how, and on which device? Is it company-owned or bring-your-own? How is it connected: home WiFi or a public hotspot? Whatever the answers are, all these parameters can be used to define trust.
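Those parameters can be combined into a per-request decision. The Python sketch below is a hypothetical policy, not how Workspace One or Carbon Black evaluate trust: the parameter values, resource names and the “allow-limited” outcome are assumptions. Note that being on the corporate network alone never grants access.

```python
def access_decision(mfa: bool, device: str, compliant: bool,
                    network: str, resource: str) -> str:
    """Hypothetical Zero Trust policy evaluated for every request.

    device: "corporate" or "byod"; network: "corporate", "home" or "public"."""
    # Identity and device health are always checked, even inside the company network
    if not mfa or not compliant:
        return "deny"
    if resource == "patient-data":
        # Sensitive data: corporate devices only, never from a public hotspot
        if device != "corporate" or network == "public":
            return "deny"
        return "allow"
    if device == "byod" or network == "public":
        return "allow-limited"  # e.g. browser-only access, no downloads
    return "allow"


print(access_decision(True, "corporate", True, "home", "patient-data"))  # allow
print(access_decision(True, "byod", True, "home", "patient-data"))       # deny
print(access_decision(True, "byod", True, "home", "email"))              # allow-limited
```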

Let’s take another look at the datacenter: the place where we find everything from legacy infrastructure to modern application development, often 10 to 15 years of infrastructure side by side. Whatever it is, it does not matter. What does matter? Do not add security controls at the end. Operating systems deployed from VM templates without knowing what the workload will eventually do? That is the reality, and it means security rules and sensors are added almost at the very end.

Encryption

Endpoint-based encryption has to be as flexible as the user: working at home, in a public café or at the beach. Wherever you are, from a usage and connectivity point of view it should feel like you are at the office. But you aren’t. Can somebody see your screen? Should you really access critical data? Is your company already using cloud services? Then it makes sense to connect directly to the cloud-based services instead of connecting to the company network first and then to the internet. There are many things to consider when you are “outside”. Secure app data at rest and in transit with AES 256-bit encryption.

No 4: East-West Security

East-west traffic usually describes communication within the datacenter, as applications talk to other applications and access data. When we speak about the datacenter, we mean both on-premises and cloud. Different methods are used to address east-west security:

Microsegmentation / Zoning

Shrinking the attack surface, stopping lateral movement, VLAN security, DMZ security, flexibility for your area (your own firewall, your own rule set), building your security concept into the infrastructure instead of a “Permit A38” (Asterix and Obelix) style add-on: there are many reasons to start working on a flexible zoning concept. Mostly it comes down to automation, speed and flexibility. “Workloads need to be secure the moment they are instantiated”, as Gartner’s Cloud Workload Protection framework puts it. You don’t want an OS template that needs to be updated first, with security added afterwards. You know how you want to secure your web server, so why wait and add security as the last step, after the machine has spent days in your infrastructure with limited or no security? You don’t want to be hacked and watch parts of your datacenter go down. By the way, if you do it right, it is a good exercise to enable automation and bring more speed into your infrastructure.
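The core of a zoning concept is default deny with explicit allows. The Python sketch below uses a hypothetical allow list of (source zone, destination zone, port) tuples and reports which observed flows would be blocked, including traffic inside a zone, where lateral movement usually happens. Zone names and ports are assumptions; port 104 is the traditional DICOM port.

```python
# Hypothetical zone-to-zone allow list; anything not listed is denied
ALLOWED_FLOWS = {
    ("web", "app", 8443),
    ("app", "db", 5432),
    ("modality", "pacs", 104),  # 104 is the traditional DICOM port
}


def is_allowed(src_zone: str, dst_zone: str, port: int) -> bool:
    """Default deny: a flow passes only if it is explicitly allowed."""
    return (src_zone, dst_zone, port) in ALLOWED_FLOWS


def blocked_flows(observed):
    """Return the observed flows that the zoning concept would block."""
    return [flow for flow in observed if not is_allowed(*flow)]


observed = [
    ("web", "app", 8443),
    ("web", "db", 5432),   # skips the app tier
    ("web", "web", 445),   # SMB between web servers: typical lateral movement
    ("modality", "pacs", 104),
]
print(blocked_flows(observed))  # [('web', 'db', 5432), ('web', 'web', 445)]
```

Running such a check against observed flows before enforcing rules shows which connections a new zone would break.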

Service Mesh

Application development principles are changing where connectivity is defined and configured. Service mesh is a good example: sidecars (service proxies) are added as a data plane, forming a layer between the control plane and the application itself. The benefits of a service mesh are not only security (authentication, encryption and authorization), but also visibility (monitoring, logging and tracing) and routing (connectivity and canary releases).

Encryption

Compliance report: TLS-based encryption (1.2 / 1.3) is the standard encryption for application communication. Or is it? If not, why not? We are running a decade’s worth of infrastructure, and not every application is capable of TLS-based encryption. You need a solution that produces a compliance report showing which encryption you are using.

East-west encryption: you cannot change your application to bring its encryption up to the newest level? App encryption can take the data stream before it hits TCP/IP, encrypt it, and decrypt it on the other end. If the application already uses SSL, app encryption wraps it, e.g. TLS 1.2 over SSL.
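The compliance report mentioned above can be reduced to classifying observed connections by protocol version. This Python sketch assumes you already collect (application, protocol) observations from flow or connection data; the application names are made up, and the protocol strings follow the format returned by Python’s `ssl.SSLSocket.version()` (e.g. "TLSv1.2"), with `None` standing for an unencrypted connection.

```python
from collections import Counter

# Protocol strings as returned by ssl.SSLSocket.version(); None = no TLS at all
COMPLIANT = {"TLSv1.2", "TLSv1.3"}


def compliance_report(observed):
    """observed: iterable of (application, protocol) pairs."""
    summary = Counter()
    non_compliant = []
    for app, proto in observed:
        label = proto if proto is not None else "plaintext"
        summary[label] += 1
        if proto not in COMPLIANT:
            non_compliant.append((app, label))
    return summary, non_compliant


observed = [
    ("pacs-viewer", "TLSv1.3"),
    ("lab-system", "TLSv1.2"),
    ("legacy-his", "TLSv1"),
    ("dicom-router", None),
]
summary, non_compliant = compliance_report(observed)
print(non_compliant)  # [('legacy-his', 'TLSv1'), ('dicom-router', 'plaintext')]
```

The non-compliant list is exactly the set of candidates for app encryption when the application itself cannot be changed.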

No 5: XDR

eXtended Detection & Response (XDR) stands for visibility and the end-to-end correlation of data. It is the perfect topic to close the 5 security trends you’d better start following, as all four previous topics are needed to achieve security end-to-end:

Security is organization-driven and needs awareness and knowledge. Most important: security controls are adapted to and based on the business.

Your assets need to be secure and updated whenever necessary. How vendors react to vulnerable versions matters more than whether vulnerabilities exist.

Zero Trust is not a switch you flip: you need to understand the framework, design it and adapt it to the business you are running. Consolidating and reducing vendors and security controls is necessary to do this efficiently.

East-west security is important right now, and it was neglected for years. The perimeter is important, but it will not help you with everything. Transport controls need to be possible for both east-west and north-south traffic.

Keep in mind: XDR could act as a session recorder for end-to-end visibility. This topic will be stressed more and more, and it shows how the organization needs to work together to see the big picture and use it to react, learn and/or adjust preventive controls. As with Zero Trust, potentially only a handful of companies have the capabilities to deliver it.

vExpert Program